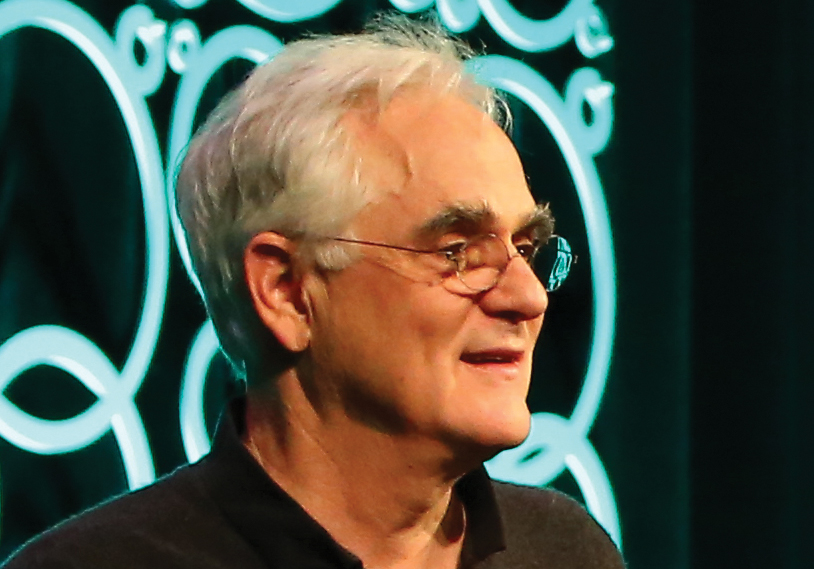

Cognitive Crossroads: Jonathan D. Cohen Tackles the Mysteries of Multitasking

Why is it so difficult to concentrate on two things at once? Despite decades of research, psychological scientists aren’t quite sure.

During his APS William James Fellow Award Address at the 2018 APS Annual Convention in San Francisco, Jonathan D. Cohen of Princeton University discussed exciting evidence that he has brought to bear on the vexing mysteries of multitasking.

For Cohen, multitasking is intertwined with humans’ powerful ability to exercise cognitive control. Our species has a unique capacity to “guide behavior in accord with internally represented goals or intentions,” particularly when doing so “involves overcoming otherwise compelling response tendencies.” For example, a person who feels an itch has a compelling tendency to scratch it but can use cognitive control to refrain. This ability isn’t found among any of our fellow animals, at least not without extensive training. Yet people can do it after a single instruction.

Despite our incredible ability to use cognitive control, it is well known that people in many situations perform poorly when they attempt to simultaneously complete two tasks that both require mental concentration. Paradoxically, in daily life, feats of multitasking abound: To drive a car, one must monitor road conditions, approaching vehicles, speed, and sometimes revolutions per minute. Skilled drivers may be able to stay abreast of all of these factors while listening to a podcast, minding the behavior of young passengers, or even abstaining from scratching an itch. Yet under other circumstances — for example, if the road conditions deteriorate — multitasking becomes challenging even for experienced drivers.

Early work on cognitive control contrasted it with automatic mental processes that happen so naturally they can be difficult to suppress. People who know how to read, for instance, have a hard time ignoring the meaning of written words, as demonstrated by the Stroop task (see Posner & Snyder, 1975). As kindergarten teachers and driving instructors can attest, automatic processes such as reading and driving don’t start out as automatic. It can take painstaking practice for controlled processes to become automatic ones.

Beginning in the 1970s, many psychological scientists used the analogy of a computer’s central processing unit (CPU) to explain the “seriality constraint” that makes it so hard to do two attention-intensive tasks at once. Cohen, however, is skeptical of this CPU analogy. After all, the human brain’s prefrontal cortex contains approximately 30 billion neurons. “Thirty billion cores, and you can’t do two two-digit arithmetic problems at the same time?” Cohen asked in San Francisco. “That just doesn’t seem right.”

What if, Cohen began to wonder, cognitive control isn’t a limitation on the brain’s processing capacity but rather a way to harness the power of shared cognitive representations that are necessary for making sense of complex information? Take the Stroop task. When we see the word “red” printed in yellow ink, we’re seeing only one object, but that one object serves two purposes: It conveys information about both a color and a word. To sort out this information, the brain needs a control signal that helps decide which representation (linguistic or chromatic) should guide the response. If both the linguistic and the chromatic representation got the “go ahead” at the same time, we might say “rellow” or “yed” in response to a Stroop item. Could cognitive control be akin to a traffic signal that referees activities in the human mind, preventing “accidents” from occurring when mental processes intersect with one another?
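The traffic-signal idea can be made concrete with a few lines of Python. This is a minimal illustration, not Cohen’s model: it assumes a single shared pool of spoken responses, hypothetical pathway strengths (reading set stronger than color naming because it is more practiced), and gates (`word_gate`, `color_gate`) standing in for the control signal. Because this toy picks one winning response, it cannot produce blends such as “rellow”; it only shows how, without control, the stronger reading pathway can take over the shared output.

```python
def shared_verbal_response(word, ink, word_gate=1.0, color_gate=1.0,
                           word_strength=1.0, color_strength=0.6):
    """Toy Stroop sketch (hypothetical parameters).

    A word-reading pathway and a color-naming pathway both add evidence to
    ONE shared pool of verbal responses. The gates play the role of a
    control signal; the response with the most evidence is spoken.
    """
    evidence = {}
    evidence[word] = evidence.get(word, 0.0) + word_gate * word_strength
    evidence[ink] = evidence.get(ink, 0.0) + color_gate * color_strength
    return max(evidence, key=evidence.get)


# The word "red" printed in yellow ink; the goal is to name the ink color.
print(shared_verbal_response("red", "yellow"))                 # prints red (error)
print(shared_verbal_response("red", "yellow", word_gate=0.2))  # prints yellow
```

In this sketch the control signal does not add processing power; it simply down-weights the pathway that would otherwise win the competition for the shared response.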

Cohen became convinced that the question of how these conceptual crossings affect traffic flow in the brain was key to understanding cognitive control. Previous models rooted in a multiple-resources theory of attention (including APS Fellow David E. Meyer and David E. Kieras’s EPIC model and Niels A. Taatgen and Dario D. Salvucci’s threaded cognition model) had already begun to address this question. Nonetheless, in Cohen’s estimation, these models left crucial questions unanswered: How does the number of crossings (in psychological terms, how often representations are shared among tasks) affect the overall parallel-processing capacity of the system or the demands for control? How does that relationship scale with network size? And, perhaps most importantly, if such crossings pose problems, why do they exist? Why doesn’t the brain build the equivalent of overpasses and underpasses that allow different activities to occur without interfering with one another?

Using computer modeling, Cohen and his team constructed networks that simulated simultaneous decision-making processes under conditions in which a reward would motivate the decision-maker to choose the correct option. The team designed the model to include shared representations of the kind Cohen believed might exist in the brain. Introducing 20% overlap among the processes responsible for decision-making radically limited the model’s capacity to multitask. Cohen considers that figure a reasonable estimate of pathway overlap in the human brain, based on our current understanding of it.

“Once you get to about 20% overlap,” Cohen reported, “it doesn’t matter how big the network is … There’s a fixed number of maximum processes that [the network] can do.” Cohen explained, “A network of 60 or 1,000 or by extension a million or a hundred billion … can really only do about 10 to 15 things at once in order to maximize reward.” Adjusting some of the model’s underlying assumptions could, by Cohen’s estimation, reduce the system’s multitasking capacity to even fewer concurrent tasks, perhaps as few as one or two at a time, a conundrum familiar to many of us who struggle to multitask. Cohen asserted that cognitive control is not responsible for this problem; rather, it is there to manage it, ensuring that among tasks that share representations, only ones that won’t interfere with each other are executed at the same time. Saying that the capacity for cognitive control is limited is like blaming firefighters for a fire: Although they are often seen at the scene, they are not responsible for the fire; they are there to put it out.
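The intuition behind this modeling result can be explored with a toy graph analysis. This is not Cohen’s model; it is a minimal sketch, assuming that each task is a pathway from one input unit to one output unit, and that two tasks can run at once only if they share no units and no existing pathway (“crosstalk”) links one task’s input to the other’s output. Finding the largest such set of tasks is NP-hard in general, so the hypothetical `greedy_parallel_set` function only approximates it, and the edge probability `p` in the hypothetical `random_task_network` is a rough stand-in for pathway overlap, not Cohen’s 20% measure.

```python
import random


def interferes(t1, t2, edges):
    """Return True if two tasks cannot safely run at the same time.

    Each task is an (input_unit, output_unit) pathway. Two tasks interfere if
    they share an input unit, share an output unit, or if an existing pathway
    ("crosstalk") links the input of one task to the output of the other.
    """
    (a, b), (c, d) = t1, t2
    if a == c or b == d:
        return True
    return (a, d) in edges or (c, b) in edges


def greedy_parallel_set(tasks):
    """Greedily collect mutually non-interfering tasks, in list order.

    This approximates a maximum induced matching of the bipartite task
    graph; computing the exact maximum is NP-hard in general.
    """
    edges = set(tasks)
    chosen = []
    for task in tasks:
        if all(not interferes(task, other, edges) for other in chosen):
            chosen.append(task)
    return chosen


def random_task_network(n, p, seed=0):
    """Hypothetical network: n input units, n output units, and each
    input->output pathway present independently with probability p.
    Tasks are shuffled so the greedy pass is not biased by index order."""
    rng = random.Random(seed)
    tasks = [(i, j) for i in range(n) for j in range(n) if rng.random() < p]
    rng.shuffle(tasks)
    return tasks


if __name__ == "__main__":
    for n in (10, 50, 200):
        for p in (0.05, 0.2):
            tasks = random_task_network(n, p)
            print(f"n={n:4d} p={p:.2f} tasks={len(tasks):6d} "
                  f"parallel={len(greedy_parallel_set(tasks))}")
```

Varying `n` and `p` lets you see, under these assumptions, how the density of shared pathways (not just the size of the network) bounds the number of tasks that can run side by side without crosstalk.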

If Cohen is correct that sharing representations among tasks severely restricts multitasking and requires cognitive control to manage it, why should such shared representations exist in the first place? Wouldn’t it make sense for evolution to endow us with a more efficient information-processing network? Of course, much of the brain is in fact built along those lines, allowing us to multitask in many situations.

Cohen suggested during his award address that sharing mental representations between tasks actually creates important cognitive advantages: Shared representations help us learn faster, more efficiently, and with greater flexibility. In computer science, cutting-edge deep learning techniques rely on shared representations to do what more traditional algorithms can’t, namely allowing programs to generalize and categorize according to nuanced similarities between items.

It isn’t a perfect system. Cohen used a Stroop-style task to show the audience that, although shared representations allow us to learn novel tasks quickly, they can sometimes trip us up. Audience members were asked to point left upon seeing the word “red” and right upon seeing the word “green” (a novel task) while also naming the ink color in which the word was printed (which didn’t match the color named by the word itself), and they struggled. In principle, it should be possible to do these two tasks at the same time: one requires a verbal response to the color, the other a manual response to the word. However, processing the word uses the same representations that are used to name it (that is, to respond to it verbally), which interferes with the verbal response to the color.

This problem would have been avoided if the manual response to the word had been learned using representations different from those used for naming it. Such a dedicated representation would have taken time to develop (a process not unlike the time-consuming work of learning to read, drive, or use sheet music to play a piano concerto). So, for Cohen’s audience, sharing the representations of words allowed a new task to be learned quickly but caused interference with another task (color naming). Cognitive control “is there to solve that problem,” Cohen said, like a traffic signal that says, “Don’t try and do these two things at once, or you’re going to get in trouble.”

Understanding the close relationship between cognitive control and shared representations, Cohen said, has implications for other problems in psychological science and allows for a more nuanced understanding of the differences between controlled and automatic processing, the trajectory by which controlled processes become automatic, and the means by which old knowledge can interfere with new learning. And, of course, it brings psychological researchers one step closer to understanding why an organ as powerful as the human brain is so darned bad at doing two things at once.

Reference

Posner, M. I., & Snyder, C. R. R. (1975). Attention and cognitive control. In R. Solso (Ed.), Information processing and cognition: The Loyola Symposium. Hillsdale, NJ: Erlbaum.
