
Methods: A Little Help to “Self-Correction”—Enhancing Science After Replications

Psychological science’s so-called “replication crisis” refers to the many landmark research findings that other researchers have been unable to demonstrate in close replications of the original studies. This “crisis” is not unique to psychological science—for example, 89% of landmark findings in preclinical cancer research were not replicated when studied with new samples. Moreover, psychological scientists have developed procedures and invested in practices to enhance scientific transparency and replicability (e.g., open-science practices). But one question that remains to be evaluated is what happens when the results of a study are not replicated: How do the new studies impact the citations of the original research? Is psychological science self-correcting?

In a 2022 article in Perspectives on Psychological Science, Paul T. von Hippel (The University of Texas at Austin) examines how replication affects the influence of original scientific research and makes several recommendations to increase the influence of replication efforts and advance scientific progress in psychological science.

Nonreplicable results challenge scientific progress

“The prevalence of nonreplicable results poses two challenges to scientific progress,” explains von Hippel. The first is improving the replicability of new research by curbing questionable research practices; to address it, researchers have established practices that facilitate sharing data and protocols and that increase research transparency (e.g., through the OSF, previously known as Open Science Framework). The second challenge involves tempering the influence of nonreplicated results and ensuring that nonreplicated findings are not cited or perceived as established findings. “If a large fraction of published findings are not trustworthy, then new scientists may be something like explorers setting off to survey the Kong Mountains or the coast of New South Greenland—discoveries claimed by early explorers and copied for decades from one map to another until later explorers, after wasting time on futile expeditions, convinced cartographers that there was nothing there,” von Hippel writes.

Recognizing and encouraging replication efforts would also influence the credibility that future investigators ascribe to the original studies. “A single replication failure does not necessarily make the original finding false, but if replication studies carry some weight in the research community, then an unsuccessful replication attempt should reduce faith in the finding’s veracity or generalizability, whereas a successful replication attempt should bolster the original finding,” von Hippel elaborates.


Unsuccessful replications deserve more influence

To measure the impact of replication studies in psychology, von Hippel examined their effect on citations. Did the original studies’ citations decrease after unsuccessful replications? Were the replications cited alongside the original studies?

Von Hippel analyzed the citation history of 98 articles, originally published in 2008, that were then subjected to replication attempts published in 2015. Relative to successful replications, failed replications reduced the original studies’ citations by only 5% to 9% on average, a change that was not statistically different from zero. Of the articles citing the original studies, fewer than 3% cited the replication attempt. Together, these results indicate that although replication efforts exist, they have had little effect on how the literature cites the original findings.

These findings “suggest that many replication studies carry far less weight than advocates for scientific self-correction might hope,” writes von Hippel. One factor contributing to this lack of influence is the inherent bias of the search engines that researchers use (e.g., Google Scholar), which prioritize studies with many past citations and assign importance based on how “classic” a study is. As a result, new replication studies tend to be ranked lower than the original studies, diminishing their visibility.

Practical recommendations to increase the influence of replications

Von Hippel makes several practical recommendations to increase the influence of replications and accelerate science’s potential to self-correct.

- Require authors to cite replication studies alongside individual findings. This “would force authors and readers to better acknowledge the weight of evidence on a given topic [and] increase incentives to conduct replications by making it harder to ignore replication studies and increasing the number of times that they get cited,” explains von Hippel.

- Enhance reference databases and search engines to give higher priority to replication studies. “Search engines could be modified to identify replication studies by text mining, and authors of replication studies could use tags to make their contributions more discoverable.”

- Create databases of replication studies and tools to automatically check bibliographies for replications of cited studies. For example, Retraction Watch (http://retractiondatabase.org/RetractionSearch.aspx) and OpenRetractions.com maintain databases of retracted studies, and new automated tools to check bibliographies for retracted items are being created (e.g., Zotero, 2019). A similar approach could be used to increase the discoverability of overlooked replication studies, von Hippel proposes.
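The bibliography check described in the last recommendation can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not a tool von Hippel or Zotero provides: the `Replication` record, the `find_uncited_replications` function, and the placeholder DOIs are all hypothetical, and a real tool would draw on a curated replication database rather than a hard-coded list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Replication:
    """Hypothetical record linking an original study to a replication attempt."""
    original_doi: str
    replication_doi: str
    outcome: str  # e.g., "successful" or "failed"


def normalize_doi(doi: str) -> str:
    """Lowercase a DOI and strip common URL or 'doi:' prefixes so matches are reliable."""
    doi = doi.strip().lower()
    for prefix in ("https://doi.org/", "http://doi.org/", "doi:"):
        if doi.startswith(prefix):
            doi = doi[len(prefix):]
    return doi


def find_uncited_replications(bibliography, replications):
    """Return replications whose original study is cited but which are not cited themselves."""
    cited = {normalize_doi(doi) for doi in bibliography}
    return [
        rep
        for rep in replications
        if normalize_doi(rep.original_doi) in cited
        and normalize_doi(rep.replication_doi) not in cited
    ]


# Placeholder DOIs for illustration only; they do not refer to real articles.
known_replications = [
    Replication("10.0000/original.1", "10.0000/replication.1", "failed"),
    Replication("10.0000/original.2", "10.0000/replication.2", "successful"),
]
manuscript_bibliography = [
    "https://doi.org/10.0000/ORIGINAL.1",  # original cited, replication missing
    "10.0000/original.2",
    "10.0000/replication.2",               # replication already cited
]

for rep in find_uncited_replications(manuscript_bibliography, known_replications):
    print(f"{rep.original_doi} has an uncited {rep.outcome} replication: {rep.replication_doi}")
```

A check like this could run when a manuscript is submitted or when a reference manager syncs a library, much as retraction notifications do today.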

Related content we think you’ll enjoy

-

Perspectives on Psychological Science

Perspectives on Psychological Science is a bimonthly journal publishing an eclectic mix of provocative reports and articles, including broad integrative reviews, overviews of research programs, meta-analyses, theoretical statements, and articles on topics such as the philosophy of science, opinion pieces about major issues in the field, autobiographical reflections of senior members of the field, and even occasional humorous essays and sketches. Visit Page

-

Open Science and Methodology

TOP Guidelines APS is a signatory to the Transparency and Openness Promotion (TOP) Guidelines, which include eight modular standards, each with three levels of increasing stringency, to promote open science practices in publishing. APS committed Visit Page

-

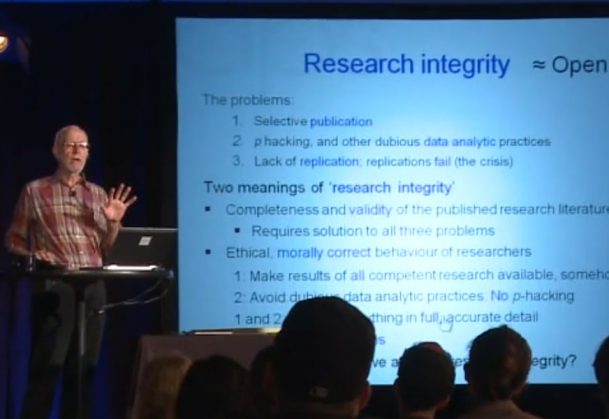

The New Statistics: Estimation and Research Integrity

Featuring Geoff Cumming La Trobe University, Australia Leading scholars in psychology and other disciplines are striving to help scientists enhance the way they conduct, analyze, and report their research. They advocate the use of “the Visit Page

Feedback on this article? Email apsobserver@psychologicalscience.org or login to comment.

References

von Hippel, P. T. (2022). Is psychological science self-correcting? Citations before and after successful and failed replications. Perspectives on Psychological Science. https://doi.org/10.1177/17456916211072525

Zotero. (2019, June 14). Retracted item notifications with Retraction Watch integration. https://www.zotero.org/blog/retracted-item-notifications
