Methods: Helping Nonscientists Differentiate Preprints From Peer-Reviewed Research

The push for rapid dissemination of scientific findings in psychological science and other fields has made preprints ubiquitous. Preprints are scientific manuscripts that researchers make publicly available before formal peer review. The COVID-19 pandemic further increased the number of available preprints. Preprints can help researchers get credit for their discoveries and allow other researchers to comment on the work, leading to improvements before it is submitted to a scientific journal. But the consequences of preprints aren’t always positive. Problems arise when preprints reach members of the general public who may not be able to tell them apart from peer-reviewed articles.

In a 2022 article in Advances in Methods and Practices in Psychological Science, Tobias Wingen (University of Cologne), Jana B. Berkessel (University of Mannheim), and Simone Dohle (University of Cologne) examined some of the negative consequences that arise when people equate preprints with peer-reviewed scientific evidence. The researchers also tested a way to help nonscientists differentiate between preprints and peer-reviewed journal articles.

Preprints and misinformation

Although preprints may inform the public and accelerate science, they may also mislead. For example, a now-retracted preprint posted during the COVID-19 crisis that described similarities between SARS-CoV-2 and HIV was picked up by social and traditional media, where it fueled speculation that the coronavirus was a genetically engineered bioweapon (Koerber, 2021). This idea later became one of the leading conspiracy theories about the coronavirus (Imhoff & Lamberty, 2020).

This is one of many examples in which findings reported in a preprint influenced how the public thought about a scientific topic. Collectively, they illustrate researchers’ concern about “members of the general public treating non-peer-reviewed preprints as established evidence, leading to ill-advised decisions, and potentially damaging public trust in science,” Wingen and his colleagues explained.

How do nonscientists perceive preprints?

Preprints are often presented with no or very little accompanying information regarding their status as preprints, which makes it difficult (or impossible) for nonscientists to distinguish them from peer-reviewed articles. “This is because [nonscientists] lack the necessary background knowledge that preprints are not peer reviewed. We hypothesize that without an additional explanation of preprints and their lack of peer review, people will perceive research findings from preprints as equally credible compared with research findings from the peer-reviewed literature,” wrote Wingen and his colleagues.

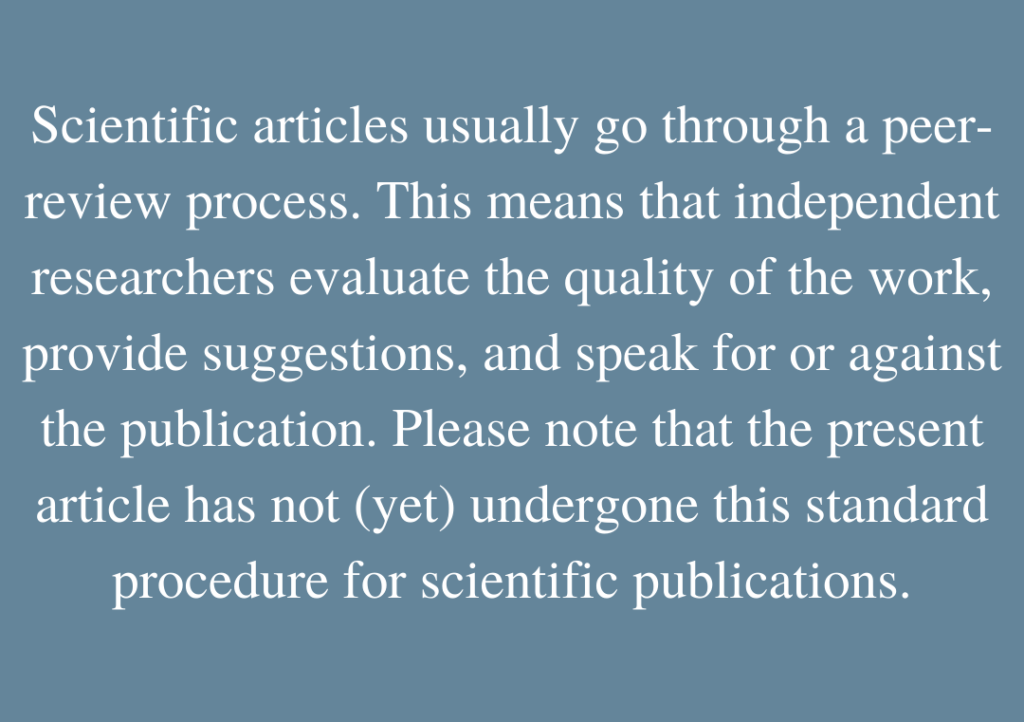

In two experiments with German and American samples, Wingen and colleagues presented participants with research findings from preprints and from peer-reviewed articles and found that participants rated both as equally credible. In two further studies, however, the researchers showed that adding an explanation of preprints and the peer-review process (see Figure 1) led German participants to rate findings from preprints as less credible than findings from peer-reviewed articles.

Why are preprints indistinguishable from peer-reviewed articles?

The findings reported by Wingen and colleagues indicate that nonscientists may indeed perceive preprints to be as credible as peer-reviewed articles. But they also suggest that this may be because nonscientists do not realize that preprints lack a quality-control process. By extension, adding a brief message explaining the concept of preprints and the peer-review process may be enough to reduce the public’s overreliance on preprint findings.

In a fifth study, Wingen and colleagues tested whether the source of the message accompanying a preprint influenced how credible nonscientists found the preprint. The researchers developed a shorter explanation (see Figure 1) and told participants whether it had been provided by the preprint’s authors or by an external source. Regardless of the source of the explanation, participants judged the findings in the preprint to be less credible than the findings in the peer-reviewed article. Surprisingly, the same effect occurred when the preprint was accompanied only by a statement saying that preprints are not peer reviewed.

To explore why adding a message to a preprint can lower the perceived credibility of its findings, Wingen and colleagues also asked participants to rate the preprint’s quality control and its adherence to scientific publication standards. Compared with participants who saw only a statement that preprints are not peer reviewed, those who received the brief explanation perceived less quality control and less adherence to scientific publication standards. Further analyses indicated that the explanation was more effective for participants who reported being more familiar with the scientific publication process.

How to help nonscientists differentiate preprint from peer-reviewed findings

These findings suggest that adding to preprints a brief message that explains the peer-review process and discloses that the articles have not been peer reviewed is an effective, low-effort way to alert readers who are unfamiliar with scientific publishing to the difference between findings that have and have not been checked for quality.

However, Wingen and colleagues reported that fewer than 30% of preprints on two preprint servers popular in psychology (OSF Preprints and PsyArXiv) contained such a message. As a result, most preprints may be indistinguishable from peer-reviewed articles to lay readers.

“Preprint authors, preprint servers, and other relevant institutions can likely mitigate this problem by briefly explaining the concept of preprints and their lack of peer review,” Wingen and colleagues concluded. “This would allow harvesting the benefits of preprints, such as faster and more accessible science communication, while reducing concerns about public overconfidence in the presented findings.”

Feedback on this article? Email apsobserver@psychologicalscience.org.

References

Imhoff, R., & Lamberty, P. (2020). A bioweapon or a hoax? The link between distinct conspiracy beliefs about the coronavirus disease (COVID-19) outbreak and pandemic behavior. Social Psychological and Personality Science, 11(8), 1110–1118. https://doi.org/10.1177/1948550620934692

Koerber, A. (2021). Is it fake news or is it open science? Science communication in the COVID-19 pandemic. Journal of Business and Technical Communication, 35(1), 22–27. https://doi.org/10.1177/1050651920958506

Wingen, T., Berkessel, J. B., & Dohle, S. (2022). Caution, preprint! Brief explanations allow nonscientists to differentiate between preprints and peer-reviewed journal articles. Advances in Methods and Practices in Psychological Science, 5(1). https://doi.org/10.1177/25152459211070559