
Kids in Their Comfort Zones

Conducting Online Developmental Research with Lookit

In the field of adult psychology, online testing has sped up and democratized data collection, expanded the scope of questions we can answer, and provided a new form of “convenience” sampling that’s far more representative than relying on undergraduates or even the volunteers who respond to local ads. Research with children stands to benefit even more, given the disproportionate difficulty of bringing them into the lab, yet developmental psychology has been slower to embrace online testing, largely because of technical hurdles and the particular demands of our discipline.

Online research could help alleviate many of the practical barriers that limit the scope and quality of developmental research. If we could run studies with kids in their homes anywhere in the world, we could reduce the sampling bias inherent in lab testing, recruit families with rare diagnoses, and observe more natural interactions. If we could bypass the bottleneck of recruiting and testing participants, we could more easily measure graded effects and look at change over time or individual differences. And, most importantly but least flashily, we could adequately power our studies so that other researchers could productively build on our work.

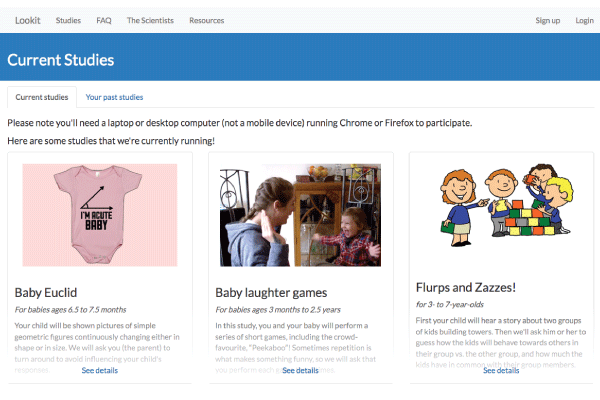

What started as a small side project has become Lookit, a platform I’ve been developing that allows developmental researchers to conduct online studies with babies and children. Families participate from home, on their own computers and on their own schedules. Parents can choose among studies from a variety of labs. To participate, they complete a short activity in the web browser with their child, and webcam video of the child’s responses is sent back to Lookit. We plan to open the platform to other researchers in May, and I invite researchers to consider joining us.

The Lookit Platform: Current Status and Capabilities

Back in 2015, as a PhD student studying cognitive development at MIT, I completed several test studies on a simple Lookit prototype. These studies established that it was possible to collect developmental data online and to code dependent measures, such as preferential looking, from the collected video as reliably as in the lab (Scott & Schulz, 2017). Since then, supported by a second grant from NSF’s Developmental and Learning Sciences program to expand access to Lookit, I’ve focused on scaling the platform so that multiple researchers can run their own studies independently, initially in collaboration with the Center for Open Science and now with our own in-house software engineer.

Lookit is built using Django, a widely used Python-based web framework that provides solid general-purpose functionality, such as a login system. From the researcher interface, researchers can create, edit, and try out studies; start and stop data collection; manage collaborators’ access; confirm consent; download child, demographic, and session data and video; and contact participants.

Study procedures themselves are set up using a library of experiment components (“frames”) and a frame player. Customizable frames are available for typical experiment components, such as consent, instructions, showing videos or storybook pages, and surveys, and there’s built-in support for random condition assignment, counterbalancing, and conditional logic. Researchers specify a study protocol using JavaScript Object Notation (JSON), a standard human-readable text format, and don’t need to write any code, although they have the option of adding custom frames. When deployed, each study is siloed in its own Docker container, using a snapshot of the codebase, so that later changes to the platform cannot unintentionally alter a study’s functionality after its protocol is finalized.
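To make the idea of a protocol-as-data concrete, here is a minimal sketch: a set of named frames plus a sequence saying which frames to show in what order, with a condition assigned at random and stimulus order counterbalanced across participants. It is written in Python only so the randomization and counterbalancing steps are visible; on Lookit itself researchers write the JSON directly. The keys (`frames`, `sequence`, `kind`), the frame kinds, the condition names, and the stimulus file names are all hypothetical placeholders, not Lookit’s actual frame library or schema.

```python
import json
import random

# Hypothetical conditions and stimuli for illustration only.
CONDITIONS = ["congruent", "incongruent"]
STIMULI = ["dog.mp4", "ball.mp4"]


def assign_condition(rng: random.Random) -> str:
    """Simple between-subjects randomization: pick one condition at random."""
    return rng.choice(CONDITIONS)


def counterbalanced_order(stimuli: list[str], participant_index: int) -> list[str]:
    """Alternate forward and reversed stimulus order across successive participants."""
    return list(stimuli) if participant_index % 2 == 0 else list(reversed(stimuli))


def build_protocol(condition: str, stimulus_order: list[str]) -> dict:
    """Return a protocol: named frames plus a sequence giving their order.

    The frame "kind" values are hypothetical, not real Lookit frame names.
    """
    frames = {
        "consent": {"kind": "consent-video"},
        "instructions": {"kind": "instructions", "text": "Please hold your child on your lap."},
        "exit-survey": {"kind": "exit-survey"},
    }
    trial_ids = []
    for i, stimulus in enumerate(stimulus_order, start=1):
        trial_id = f"test-trial-{i}"
        frames[trial_id] = {"kind": "video-trial", "video": stimulus, "condition": condition}
        trial_ids.append(trial_id)
    # The sequence is the part a researcher reads to see what happens, in order.
    sequence = ["consent", "instructions", *trial_ids, "exit-survey"]
    return {"frames": frames, "sequence": sequence}


if __name__ == "__main__":
    rng = random.Random(2020)
    protocol = build_protocol(assign_condition(rng), counterbalanced_order(STIMULI, participant_index=1))
    print(json.dumps(protocol, indent=2))
```

The printed JSON is the kind of human-readable text a researcher would edit; the point of keeping frames and sequence separate is that reordering a study means editing one list rather than rewriting frame definitions.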

The Lookit platform fits into a growing ecosystem of tools in related but complementary spaces: Yale’s TheChildLab.com project, for instance, has developed techniques to run scheduled live-interaction studies with children via webcam. Platforms such as jsPsych, lab.js, PsyToolkit, Testable, PsychStudio, and Gorilla offer functionality for building and/or hosting (generally adult) online behavioral experiments to run in the web browser. Prolific, Testable Minds, and Amazon Mechanical Turk offer (adult) participant recruitment and management. Our own custom experiment builder within Lookit has allowed us to support a variety of developmental tasks, which are often more complex than adult test trials (e.g., including more audio and/or video instructions, custom animation, interactive elements); however, as other systems mature, we look forward to being able to integrate other options for experiment creation.

With Lookit under active development, we’ve accepted a limited number of collaborative beta-test studies to make sure we’re focusing on what matters to our eventual users (both researchers and participants). We now have 10 studies from eight institutions in various stages of completion—four actively testing, three completed, and three in preparation at the time of writing. Over the past year alone, more than 800 families have registered, and children from 41 US states speaking 20 different languages have participated. Data quality is excellent; the platform’s video quality and general reliability alike have improved substantially since initial studies using the prototype. Additional comparisons with in-lab data, and the first novel results, are coming soon from our beta testers.

Launch and Vision

We’re targeting May 2020 to officially “launch” Lookit, making it available free of charge to anyone to use for their own research. (Stay up to date on our progress toward launch via GitHub Projects: github.com/orgs/lookit/projects/.) Researchers will need to sign a general institutional agreement with MIT, developed by MIT’s lawyers and modeled after the Databrary agreement, that allows researchers to access and contribute to a shared repository of video from developmental studies. Researchers will also be responsible for obtaining approval from the institutional review board (IRB) at their own institutions.

Our aim is to provide infrastructure that enables a wide range of researchers to creatively and productively address their own questions. As more researchers run studies on the platform, we hope they will give back by participating actively in the community: trying out one another’s studies, offering advice, adding to the documentation, and filing bug reports and feature requests to provide input on development.

While all of Lookit’s code is open source and publicly available, there’s substantial benefit to researchers in banding together to use and support a particular instantiation of such a tool. We all benefit from economies of scale in hosting, feature development and software maintenance, and IRB and legal coordination. And we can make Lookit a place with a steady stream of fresh, interesting content, which will help with recruiting and engaging participants.

Unique Challenges of Collecting Developmental Data Online

While the basic idea behind Lookit is straightforward, it’s taken the better part of a decade to get from an idea to a platform. There have certainly been some project-management and administrative hurdles—we are, after all, proposing to let other people collect video of children on the internet using MIT infrastructure. But for the most part, we’ve come up with ways to handle the challenges of creating and administering a platform for the field at large. Here are some of the solutions we’ve been working on:

Collecting video in the home

Collecting video online and in participants’ homes introduces a few ethical and privacy considerations beyond those of typical in-lab videotaping. As we scale up, much of our effort has centered on building safeguards for responsible handling of participant data.

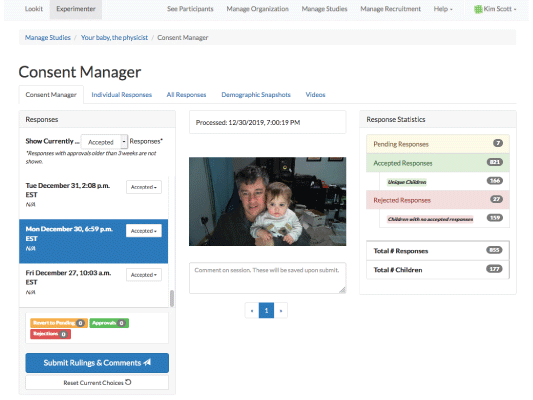

First, we need to be extremely certain that all families agree to webcam videotaping and that we appropriately handle those cases in which we cannot be certain. Unlike in the lab, it’s possible for someone to end up on Lookit and try to “click through” the study out of curiosity without understanding what’s going on. Families on Lookit consent to participate by making a recorded verbal or signed statement, which ensures they can understand written English and know they’re being recorded. To minimize the potential for human error in the consent process, a “consent manager” view allows researchers to confirm consent videos in the web browser. That confirmation is stored in the database, and only responses with confirmed consent are available to view or download.
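The rule that only consent-confirmed responses can be viewed or downloaded amounts to a simple filter over stored responses. The following is a minimal Python sketch under stated assumptions: the `Response` class, the `consent_ruling` field, and its `"accepted"`/`"rejected"` values are hypothetical and do not describe Lookit’s actual Django models. The key design choice is that an unreviewed response is treated the same as a rejected one, so a missed review can only hide data, never expose it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Response:
    """Hypothetical stand-in for one study session's record."""
    response_id: str
    consent_ruling: Optional[str] = None  # None until a researcher reviews the consent video


def set_consent_ruling(response: Response, ruling: str) -> None:
    """Record a researcher's review of the consent video."""
    if ruling not in {"accepted", "rejected"}:
        raise ValueError(f"unknown consent ruling: {ruling!r}")
    response.consent_ruling = ruling


def viewable_responses(responses: list[Response]) -> list[Response]:
    """Expose only responses with confirmed consent; pending and rejected ones stay hidden."""
    return [r for r in responses if r.consent_ruling == "accepted"]


if __name__ == "__main__":
    responses = [Response("r1"), Response("r2"), Response("r3")]
    set_consent_ruling(responses[0], "accepted")
    set_consent_ruling(responses[1], "rejected")
    # r3 has not been reviewed yet, so it is hidden along with the rejected r2.
    print([r.response_id for r in viewable_responses(responses)])  # prints ['r1']
```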

Second, video recording in the home is more likely to incidentally capture other family members, personal information, and uncensored interaction. At the end of all Lookit studies, a standard “exit survey” component lets participants select acceptable uses for their video, including whether it can be shared with Databrary. Parents also have the (very rarely used) opportunity to withdraw video from the study altogether. Withdrawn video is automatically made unavailable and deleted from our servers. Providing privacy options at the end rather than the beginning of the study allows parents to make an informed decision about sharing video on the basis of what happened throughout the study.
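One way to picture the exit-survey choices is as a set of permissions attached to each session’s video. This minimal Python sketch assumes three hypothetical privacy levels plus a Databrary-sharing flag and a withdrawal flag; the level names and use labels are illustrative, not Lookit’s actual exit-survey options. Withdrawal overrides every other answer, mirroring the rule that withdrawn video becomes unavailable.

```python
from dataclasses import dataclass
from enum import Enum


class VideoUse(Enum):
    """Hypothetical privacy levels a parent might choose in the exit survey."""
    PRIVATE = "private"        # research team only
    SCIENTIFIC = "scientific"  # also scientific presentations
    PUBLICITY = "publicity"    # also publicity materials


@dataclass
class ExitSurvey:
    video_use: VideoUse
    share_with_databrary: bool
    withdrawn: bool = False


def allowed_uses(survey: ExitSurvey) -> set[str]:
    """Translate exit-survey answers into the set of permitted uses for the session video."""
    if survey.withdrawn:
        # Withdrawal overrides everything: the video is unavailable for any use.
        return set()
    uses = {"research_team"}
    if survey.video_use in (VideoUse.SCIENTIFIC, VideoUse.PUBLICITY):
        uses.add("scientific_presentations")
    if survey.video_use is VideoUse.PUBLICITY:
        uses.add("publicity")
    if survey.share_with_databrary:
        uses.add("databrary")
    return uses


if __name__ == "__main__":
    survey = ExitSurvey(VideoUse.SCIENTIFIC, share_with_databrary=True)
    print(sorted(allowed_uses(survey)))  # prints ['databrary', 'research_team', 'scientific_presentations']
```

Because the choices are recorded at the end of the session, the permission set reflects what the parent actually saw happen during the study.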

Supporting data analysis and sharing workflows while minimizing potential for unintended disclosure

One of our central engineering goals is to make it as easy as possible to download, analyze, and share data replicably and responsibly. Researchers can download CSV files with study data as well as automatically generated data dictionaries. Potentially identifying information, such as a child’s birthdate or exact age at time of participation, is technically accessible, but by default it is omitted from response data downloads. By setting this default behavior, we “nudge” researchers toward planning for eventual data sharing from the start by keeping sensitive data separate. To avoid each lab having to reinvent the wheel for common workflows, Lookit also provides processed data that can be published without redaction. For instance, researchers can download rounded ages, as well as child IDs that are unique to their own study, so that data from different studies cannot be unintentionally combined.
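The default de-identification described above can be sketched in a few lines of Python. This is an illustration under assumptions, not Lookit’s implementation: the field names, the 10-day rounding granularity, and the use of a keyed hash (HMAC-SHA256 from the standard library) to derive study-specific child IDs are all hypothetical choices. Keying the hash on the study ID gives the same child a stable ID within one study but unrelated IDs across studies, so datasets from different studies cannot be joined on that column.

```python
import hashlib
import hmac
from datetime import date

# Hypothetical server-side secret; it would never be included in researcher downloads.
SECRET_KEY = b"replace-with-a-real-secret"
# Hypothetical field names treated as identifying and omitted by default.
IDENTIFYING_FIELDS = {"child_id", "birthdate", "exact_age_days"}


def study_specific_child_id(child_id: str, study_id: str, key: bytes = SECRET_KEY) -> str:
    """Keyed hash of (study, child): stable within a study, unlinkable across studies."""
    message = f"{study_id}:{child_id}".encode("utf-8")
    return hmac.new(key, message, hashlib.sha256).hexdigest()[:12]


def rounded_age_days(birthdate: date, session_date: date, granularity: int = 10) -> int:
    """Age at participation rounded to the nearest multiple of `granularity` days (halves round up)."""
    age = (session_date - birthdate).days
    return granularity * ((age + granularity // 2) // granularity)


def deidentified_row(row: dict, study_id: str) -> dict:
    """Default download row: drop identifying fields, add a rounded age and a study-specific ID."""
    out = {k: v for k, v in row.items() if k not in IDENTIFYING_FIELDS}
    out["child_id"] = study_specific_child_id(row["child_id"], study_id)
    out["age_rounded_days"] = rounded_age_days(row["birthdate"], row["session_date"])
    return out


if __name__ == "__main__":
    row = {"child_id": "child-123", "birthdate": date(2019, 1, 1),
           "session_date": date(2019, 4, 11), "looking_time_s": 4.2}
    print(deidentified_row(row, study_id="study-A"))
```

The raw birthdate stays on the server; only the derived, publishable columns leave it by default, which is what makes the download safe to share without further redaction.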

A shared reputation

A central platform shared across labs not only allows researchers to benefit from economies of scale in engineering and recruitment, but it also means that Lookit studies largely share a common reputation among families. Lookit studies are by design somewhat standardized to reduce cognitive load for participant families, further reinforcing the connections among studies. If another lab’s study is really fun, works smoothly, and gets positive media attention, you benefit as well. On the other hand, you are also affected if another lab accidentally discloses personal information or conducts an ethically questionable study. Lookit’s shared reputation is a large part of why our terms of use reserve the right to review studies ahead of posting for technical and ethical issues and why we have more conservative guidelines than most IRBs on what constitutes “deception” that must be disclosed.

Online recruitment

One of the biggest remaining hurdles is scaling online recruitment. Developmental labs have built up substantial expertise around recruiting in-person participants, but they generally have very little experience recruiting online participants. We have had a few undergraduate students experiment with organic and paid social-media advertising; our primary conclusion is that we should not expect the platform to magically attract thousands more parents without concerted—and skilled—efforts toward advertising and media outreach. On the bright side, recruitment is empirically not a zero-sum game for the multiple researchers using Lookit: Advertising one study has substantial benefits for the other studies on the site.

Tips for Translating Your Study to an Online Environment

Focus on communication

In my experience, the biggest challenge developmental researchers face in moving studies onto Lookit is nontechnical: communicating clearly with parents despite the noninteractive setting. Although we’re great at making studies intuitive and clear to young children, we’re used to explaining the goals of and instructions for a study to parents in a social context where we can adapt on the basis of their cues and questions.

Trying to cover these same bases without an interactive setting is hard. It’s worth the time, however, to really polish parent-facing study overviews, debriefings, and instruction text and/or verbal instructions. Even the study design itself may need a bit more signposting. Transitions that might be fine in the lab—for instance, proceeding from the last experimental test trial to the exit survey and debriefing—can feel more abrupt online.

Make it fun!

Online, families will be implicitly comparing a study to a kids’ app or video game (by comparison, the closest equivalent for a lab visit is a trip to the doctor!). Although it’s not realistic to expect a developmental experiment to be quite as engaging as a top-selling app, sometimes delivery or stimuli are drier than they have any scientific need to be, just out of habit.

When you record audio or video, ask yourself these questions: Is there actually any scientific need for a flat delivery? Will adding silly pages or a fun-to-say chorus to your storybook actually invalidate the interpretation of kids’ answers? Is there a reason parents can’t have an active role in asking questions or giving feedback?

Think creatively about the value for families

For families deciding whether to participate, the intangible rewards can be just as important as the compensation provided. We’re all just getting started figuring out what the possibilities are online, but here are some nonmonetary “rewards” to consider:

- A cute video clip or still image from the study. For example, our study that involved dense sampling produced a video “collage” of clips from the 12 sessions.

- Insight into a child’s particular behavior or unique strengths

- Some social connection via study “feedback” on the platform, even if it’s just your opinion that a particular child’s mind is fascinating

- Personal advice or encouragement about common parenting questions related to your research, or customized references to educational or parenting resources

Interested in Joining Us?

Again, we’re planning to fully open up the platform in May. But there are a few steps researchers can take ahead of time to hit the ground running.

Do I have to be a “techie”?

The short answer is no. Researchers can create an experiment without writing a single line of code; they do not need to know any programming languages or to have run studies online before. Study specification isn’t drag-and-drop (yet), but what needs to be edited to build a study is a simple, human-readable text document that says what frames to use in what order.

That said, it’s important for researchers to read the documentation and keep an open mind about their own abilities, for instance by not assuming that an error message is beyond them to figure out. Running experiments with babies on the internet might, by definition, make you a techie.

Will it work for my study? What dependent measures can I collect?

In terms of study protocol, researchers can do most of what one can imagine happening in a web browser. It’s actually easier to focus on what isn’t likely to work yet: live coding of looking measures; precise (frame-level) timing of videotaped responses, such as in some predictive-looking studies; strict control over the environment (e.g., precisely controlling lighting in the room or distance from the screen); and designs in which live experimenter interaction or particular bespoke objects are key.

Some studies may not be a good fit for the platform because of its shared reputation. For instance, we don’t recommend exposing preschoolers to “the debate” about climate change or making a study that is deliberately frustrating for parents.

Currently, our beta testers are collecting or planning to collect data from newborns through teenagers. They’re using looking measures, verbal or pointing responses, laughter, surveys, and performance on custom games. If researchers are not sure whether something’s possible, they can just ask us!

A Platform for Everyone

At this point, we’ve only scratched the surface of the possibilities for online developmental research: Initial beta testers have largely focused on direct adaptations of in-lab studies to verify that their basic protocols will work. I’m especially eager to start supporting the applications I haven’t yet imagined and the (potential) researchers who wouldn’t otherwise be running developmental studies at all because they lack resources.

I hope you’ll join us this spring!

Here’s How to Get Started

Step 1: Get your legal ducks in a row

If you’re interested in using Lookit, the first step is to ask your institution to sign the Lookit Access Agreement. You can find the agreement and more information at github.com/lookit/research-resources/wiki/IRB-and-legal-information.

We recommend getting started on this piece right away. Sending the request should take you only a few minutes, but your institution may take a few months to return the signed agreement. You just need to email the form to someone at your institution who can act as an “authorized institutional representative.”

Step 2: Learn to set up your study

The best way to get up to speed on Lookit is to complete the tutorial included in the documentation at lookit.readthedocs.io/en/develop/tutorial.html. You’ll learn how to access the Lookit platform and community; get comfortable editing and troubleshooting an example study; walk through setting up a realistic study from scratch; and practice coding consent and interpreting downloaded data. You’ll even learn to add to the documentation yourself (I know, thrilling!), in case you find something missing.

References and Resources

Scott, K., & Schulz, L. (2017). Lookit (part 1): A new online platform for developmental research. Open Mind, 1(1), 4-14.

Lookit wiki: github.com/lookit/research-resources/wiki

Lookit documentation and tutorial: lookit.readthedocs.io/en/develop/

Lookit codebase and development plans: github.com/lookit/

Comments

Hi, Kim and colleagues! We are a team of researchers at Western Sydney University in Sydney, Australia, who are hoping to run a series of studies on foundational abilities for phonological abstraction in infants under 6 months, with longitudinal follow-up to 18 months to investigate whether these early abilities predict later aspects of early language development.

We are working our way through the Lookit tutorial and the researcher FAQs on signing up, designing, and posting our studies via Lookit. I'm guessing we may have additional questions as we go, but for now we have two questions that we hope you or your team will be able to answer:

1. The latest posting on your main site is "May 4, 2020: For even more ways to participate in science with your kids at home, check out the newly launched site Children Helping Science." Does this mean that Lookit has now also launched for other researchers to submit their studies to run via Lookit? Following your online researcher instructions, of course!

2. Is Lookit amenable to longitudinal studies, i.e., multiple planned sessions with the same infant at different ages? We think so, but wanted to confirm that there are no constraints on longitudinal studies with email reminders to parents if we run the studies on our home university server and collect emails (we think Option C looks best suited to us).

Thank you for any advice/confirmations you can send at your convenience!

Cheers,

Cathi Best, Denis Burnham and Anne Cutler, MARCS Institute, Western Sydney University, Australia

Hi Cathi!

First, yes, longitudinal studies are very welcome on Lookit. We see the ease of follow-up sessions as one of the big benefits of moving research online! Our launch date was slightly delayed due to the coronavirus crisis – we’ll be announcing an updated date shortly. (There’s up-to-date info on the wiki and on Slack.)

ChildrenHelpingScience.com is actually an entirely separate endeavor – it’s a website with links to studies being run online, but doesn’t provide any of the infrastructure for collecting data. Some of our beta testers have added links to their Lookit studies there. Running studies on your own university server would be a separate approach from running them on Lookit, but any online study can be submitted for listing on ChildrenHelpingScience.

The best way to get in touch with questions is via the Lookit Slack workspace, where other researchers may also be able to chime in with their experiences and advice. Instructions for access are at the start of the tutorial, but do let us know if you have any trouble!

all the best,

Kim
