Psychological Science Submission Guidelines

Updated 6 December 2024

Psychological Science welcomes the submission of papers presenting empirical research in the field of psychology. Preference is given to papers that make an important contribution to psychological science, broadly interpreted to include emerging as well as established areas of research, across specialties of psychology and related fields, and that are written to be relevant for and intelligible to a wide range of readers. Please see the most recent editorials (Hardwicke & Vazire, 2023; Vazire, 2023) for more information about the latest developments at Psychological Science.

Submission of Manuscripts

Manuscripts should be submitted electronically to the Psychological Science submission site, http://mc.manuscriptcentral.com/psci. Before submitting your manuscript, please be sure to read the submission guidelines below. You may also want to consult the Contributor FAQ.

Read the latest editorial policies from the APS Publications Committee.

Quick Links

- Manuscript Review Process

- Preparing Your Manuscript

- Contributor FAQ

- Accepted Manuscripts

Manuscript Review Process

Three-Tier Review

For an overview of the peer review process, see Questions 1 and 2 of the Contributor FAQ. For most submitted manuscripts, at least two members of the editorial team read the manuscript before making an initial decision (to reject the submission without review or to send it out for review). In this initial review, manuscripts are anonymized as to authors and originating institutions; to facilitate this, authors will be asked to upload an anonymized version of the submission. If both readers decide the paper is unlikely to be competitive for publication, then the paper is rejected without review (“desk rejected”). Common reasons for desk rejection include narrow scope, poor writing, insufficient methodological rigor, insufficient transparency, overclaiming or exaggeration, mismatch between the research aims and the research design, mismatch between the empirical results and the conclusions, signs of flexibility in data collection or analysis, and high risk of statistical inference error (e.g., false positive or false negative, inflated effect size estimate).

If either reader evaluates the paper as having a reasonable likelihood of ultimately being accepted for publication in the journal, then it is sent to two or more external reviewers for extended review. An Associate Editor usually oversees this process and writes the subsequent decision letter (accept, reject, or revise and resubmit).

Once the initial review is completed, authors are notified by e-mail that their manuscript either (a) has been rejected on initial editorial review or (b) has been sent to outside experts for extended review (the second tier of review). Manuscripts rejected after either initial or extended review will not be reconsidered unless the responsible action editor has invited resubmission following revision (see Question 16 in the Contributor FAQ).

Upon submission, authors will be asked to recommend a relevant editor to handle their submission. Note that only the Editor in Chief and the Senior and Associate Editors handle submissions; STAR Editors do not. Authors also have the option to recommend one or more reviewers when submitting a manuscript. However, these recommendations should exclude all authors’ former mentors and mentees, current colleagues at the same university, current or recent (within the past four years) collaborators, and anyone else who would reasonably be perceived as having a conflict of interest with any of the authors. Please keep in mind that the editor will consider these recommendations but cannot guarantee that they will be honored.

After extended review, if the Action Editor deems the manuscript suitable for publication in Psychological Science, the authors will be notified that their manuscript has been conditionally accepted, and the manuscript will enter the third tier of review, STAR transparency review. At this stage, Statistics, Transparency, and Rigor (STAR) editors will perform routine transparency checks in coordination with the Action Editor. Authors can markedly reduce the waiting time during this tier of review by following best practices for transparent reporting (see the Research Transparency Statement section below for more information, and see the educational resources [link coming]). If capacity allows, STAR editors will also randomly select articles for computational reproducibility checks.

Criteria for Acceptance for Publication in Psychological Science

The main criteria for publication in Psychological Science are general theoretical and empirical significance and methodological/statistical rigor.

- “General” because to warrant publication in this journal a manuscript must be of general interest to psychological scientists. Research that is likely to be read and understood only by specialists in a subdomain is better suited for a more specialized journal.

- “Theoretical and empirical significance” because research published in Psychological Science should be strongly empirically grounded and should address an issue that makes a difference in the way psychologists and scholars in related disciplines think about important issues. Work that aims to only modestly extend knowledge can be valuable but is unlikely to meet criteria for acceptance in this highly selective journal. Note that the emphasis here is on the significance of the aims and design of the research, rather than on the significance of the results.

- “Methodological/statistical rigor” because the validity of methods and inferences is a foundational value of science. Science, like the rest of life, is full of trade-offs, and the editors at Psychological Science appreciate that it is more difficult to attain high levels of precision and validity in some important areas of psychology than in others. Nonetheless, to succeed, submissions must be as rigorous as is practically and ethically feasible, and must also be frank in addressing limits on their validity (including construct validity, internal validity, statistical validity, and external validity or generalizability). In addition, manuscripts must pass STAR review before being accepted for publication.

Replication studies, generalizability tests, and other verification work can meet these criteria. If a published study is of general interest and has theoretical and empirical significance, and if there is appreciable uncertainty about its results, then a high-quality study testing the replicability or generalizability of the effect may also meet the criteria for publication.

The journal aims to publish works that meet these three criteria in a wide range of substantive areas of psychological science. Historically, experimental fields, and especially cognitive and social psychology, have been dominant in this journal, and research participants often are from a restricted range of the world’s population. Moreover, the majority of articles published in the journal are authored by scientists from the United States. The editors encourage submissions from a broader range of research designs, including observational, longitudinal, descriptive, and qualitative or mixed methods, and from a broader span of areas within psychological science, including, for example, biological psychology, cognitive and affective neuroscience, communication and language, comparative, cross-cultural, developmental, gender and sexuality, and health (this is not intended as a comprehensive list). The editors also encourage submissions of work with populations that are underrepresented in the psychology literature, as well as submissions that take psychological science into “the wild” (the natural contexts in which we live) or whose designs give special attention to the realism and authenticity of the procedures, stimuli, measures, and materials. The editors are also eager to receive submissions of work conducted by psychological scientists from around the world.

Submissions centered on clinical science that meet the criteria outlined above will be considered, but many clinically oriented manuscripts are likely to be of primary interest to clinicians and hence are more appropriate for Clinical Psychological Science. Similarly, works with a primary focus on methods and research practices are generally better suited for Advances in Methods & Practices in Psychological Science, yet the editors are open to considering methodological manuscripts of extraordinary generality and importance.

Note that ‘important’ differs from ‘novel’, ‘statistically significant’, and ‘surprising’. The editors welcome submissions that are not novel (e.g., a direct or close replication), and/or not statistically significant (e.g., evidence of absence; or inconclusive results, when more evidence would be difficult to collect), provided the research is rigorous and a strong case is made for the importance of the work. Moreover, submissions that overclaim (e.g., draw conclusions that are not well-calibrated to the evidence or to the strengths and limitations of the research design) are more likely to be rejected. Authors are expected to make a compelling but well-calibrated case for the importance of the research, and the editors will be open to many different ways a contribution can be important (e.g., theoretical or applied value, methodological innovation, value of the data, etc.).

Journals of the Association for Psychological Science (APS)

Psychological Science does not compete with other journals of APS, including Advances in Methods and Practices in Psychological Science, Clinical Psychological Science, Current Directions in Psychological Science, Perspectives on Psychological Science, and Psychological Science in the Public Interest. The journals vary in terms of domain and manuscript formats. Manuscripts rejected by another APS journal on the grounds of quality (e.g., flaws in methodology, data, or concept) are not eligible for consideration by Psychological Science.

Preparing Your Manuscript

Article Types

Research Article. Most of the articles published in Psychological Science are Research Articles. Research Articles make empirical and theoretical contributions that propel psychological science in substantial and significant ways. Novel studies, replication studies, and extension studies are all welcome, so long as they meet the criteria outlined above. Meta-analyses and other forms of evidence synthesis are typically not considered. Descriptions and word limits for the sections of Research Articles are given below.

Abstract: All Research Articles must include a 150-word abstract that identifies the sample sizes and participant populations on which the research was conducted, and any important limitations of the research design. The abstract does not count toward the word limit.

Introduction, Discussion, Footnotes, Acknowledgments, and Appendices: These sections may contain no more than 2,000 words combined. Authors are encouraged to be concise and focused in the Introduction and Discussion sections to keep them as brief as possible while also establishing the significance of the work. This word limit does not include the Abstract, Method and Results sections (except footnotes), cover page, or reference list.

Method and Results: These sections of Research Articles do not count toward the total word limit. The aim of unrestricted length for Method and Results sections is to allow authors to provide clear, complete, self-contained descriptions of their studies. But as much as Psychological Science prizes narrative clarity and completeness, so too does it value concision. In almost all cases, an adequate account of method and results can be achieved in 2,500 or fewer words for Research Articles. Methodological minutiae and fine-grained details on the Results (the sorts of information that only “insiders” would relish) should be placed in Supplemental Files, not in the main text. However, a reader who reads only the main text should not come away with any misconceptions or major gaps in their understanding of the method and results. Moreover, all details of the method and analyses necessary for an independent researcher to replicate the study must be included in either the main text or the Supplemental Files.

Authors should include in their Method sections (a) a description of the sample(s) selected for the study and an explanation of the basis(es) for the composition of their samples (whether the sample was selected for specific theoretical or conceptual reasons, is a sample of convenience, etc.); (b) the total number of excluded observations and the reasons for making the exclusions (if any); and (c) an explanation of why the sample size is considered reasonable, supported by a formal power analysis, if appropriate. Authors should also confirm in their Method section that the research meets relevant ethical guidelines. Hybrid “Method & Results” sections are disallowed for any type of submission.

Discussion: In the Discussion (or General Discussion), authors should explicitly consider the limitations of their research design (including but not limited to explicit consideration of the limits on the generalizability of their findings) and the most important limitations should be reflected in authors’ conclusions and abstract.

Many Research Articles contain two or more studies. Such submissions may include “interim” introductions and discussions that bracket the studies, in addition to an opening “general” introduction and a closing “general” discussion. Authors who opt for this sort of organization should bear in mind that the aforementioned word limits on introductory and Discussion sections include both interim and general varieties. Any combined “Results and Discussion” sections will be counted toward the word limit.

Within reasonable limits, narrative material that belongs in the Introduction or Discussion section should not be placed in the Method or Results section. For example, authors may include a few sentences to place their findings in context when they are presented in the Results section. However, excessively packing a Method or Results section with material appropriate to the Introduction or Discussion will trigger immediate revision or rejection of the manuscript.

References: Authors are encouraged to cite only the sources that bear on the point directly and to refrain from extensive parenthetical lists of related materials, keeping in mind that citations are meant to be supportive and not exhaustive. As a general rule, 40 citations should be sufficient for most Research Articles. However, this is not a hard-and-fast limit, and editors have the flexibility to allow more references if they are necessary to establish the scientific foundation for the work.

Short Report. As of May 15, 2020, Psychological Science is no longer considering Short Reports for publication. Manuscripts that previously would have been submitted in this category should now be submitted as Research Articles.

Preregistered Direct Replication. As of January 1, 2024, Psychological Science is no longer considering Preregistered Direct Replications (PDRs) for publication. Replication studies can be submitted as Research Articles or as Registered Reports, and they will be evaluated by the same criteria (general interest, theoretical and empirical significance, methodological/statistical rigor) as other submissions. However, to preserve the self-corrective function of PDRs, high-quality direct replications of studies previously published in Psychological Science that are submitted as Stage 1 Registered Reports by an author team independent of the original authors will be evaluated only on methodological and statistical rigor (i.e., their general interest value and theoretical and empirical significance will be taken as a given).

Registered Report

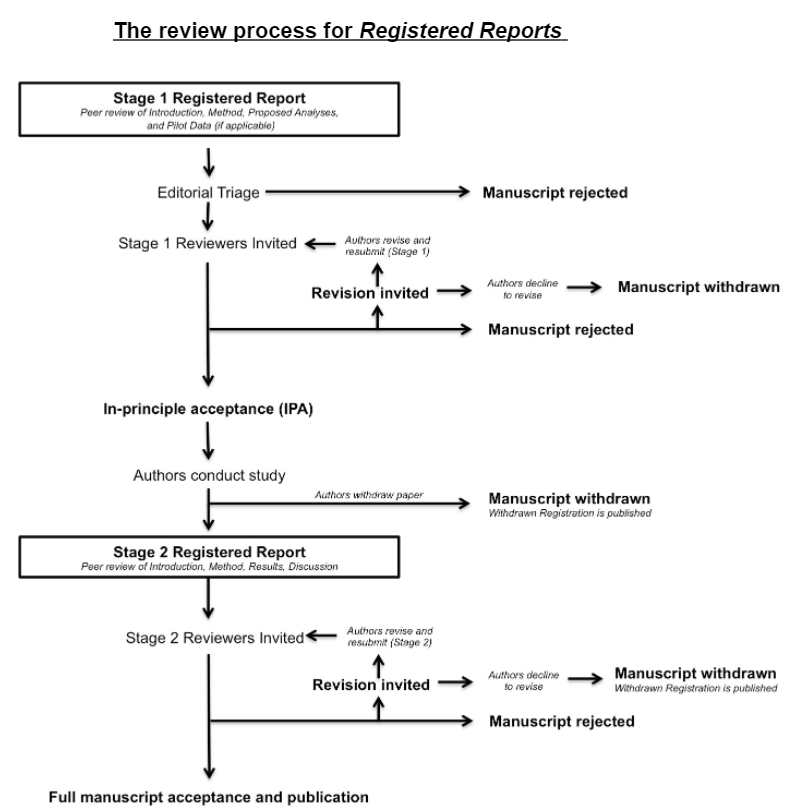

The cornerstone of the Registered Reports format is that a significant part of the manuscript will be assessed prior to data collection, with the highest quality submissions accepted in advance. Both replication studies and novel studies are welcome. Note that the guidelines for the Research Article type also apply to the Registered Report type, unless otherwise specified below.

Titles of manuscripts should begin with “Registered Report”. Initial submissions will include a description of the key research question and background literature, hypotheses, experimental procedures, analysis pipeline, a statistical power analysis (or Bayesian equivalent), and pilot data (where applicable). Initial submissions will be triaged by the editorial team for suitability. Those that pass triage will then be sent for in-depth peer review (Stage 1).

Stage 1 review

In considering papers at Stage 1, reviewers will be asked to assess:

- The importance of the research question(s).

- The logic, rationale, and plausibility of the proposed hypotheses.

- The soundness and feasibility of the methodology and analysis pipeline (including statistical power analysis where appropriate).

- Whether the clarity and degree of methodological detail are sufficient to exactly replicate the proposed experimental procedures and analysis pipeline.

- Whether the authors have pre-specified sufficient outcome-neutral tests for ensuring that the results obtained can test the stated hypotheses, including positive controls and quality checks.

Following Stage 1 peer review, the article will then be either rejected or accepted in principle for publication. Following Stage 1 in principle acceptance, the journal will register the approved protocol on the Open Science Framework (https://osf.io/rr) on behalf of the authors. By default, the protocol will be public unless authors request a private embargo until submission of the Stage 2 manuscript.

Following in principle acceptance (IPA), and after receiving confirmation that the Stage 1 protocol has been registered, the authors will then proceed to conduct the study, adhering exactly to the peer-reviewed procedures. When the study is complete, the authors will submit their finalized manuscript for re-review (Stage 2). Pending quality checks and a sensible interpretation of the findings, the manuscript will be published regardless of the results.

Stage 2 review

Authors are reminded that any deviation from the stated experimental procedures, regardless of how minor it may seem to the authors, could lead to rejection of the manuscript at Stage 2. In cases where the Stage 1 protocol is altered after IPA due to unforeseen circumstances (e.g., change of equipment or unanticipated technical error), the authors must consult the Action Editor immediately for advice, and before data collection is complete. Minor changes to the protocol may be permitted at editorial discretion. In such cases, IPA would be preserved and the deviation reported in the Stage 2 submission. If the authors wish to alter the experimental procedures more substantially following IPA but still wish to publish their article as a Registered Report, then the manuscript must be withdrawn and resubmitted as a new Stage 1 submission. Note that registered analyses must be undertaken, but additional unregistered analyses can also be included in a final manuscript.

Apart from minor stylistic revisions, the Introduction cannot be altered from the approved Stage 1 submission, and the stated hypotheses cannot be amended or appended. At Stage 2, any description of the rationale or proposed methodology that was written in future tense within the Stage 1 manuscript should be changed to past tense. Any textual changes to the Introduction or Methods (e.g., correction of typographic errors) must be clearly marked in the Stage 2 submission. Any relevant literature that appeared after the date of IPA should be covered in the Discussion.

Results.

The outcome of all registered analyses must be reported in the manuscript, except in rare instances where a registered and approved analysis is subsequently shown to be logically flawed or unfounded. In such cases, the authors, reviewers, and editor must agree that a collective error of judgment was made and that the analysis is inappropriate. The analysis would then still be mentioned in the Methods, and the Results section would report the justification for omitting it.

Authors may reasonably wish to include additional analyses that were not included in the registered submission. For instance, a new analytic approach might become available between IPA and Stage 2 review, or a particularly interesting and unexpected finding may emerge. Such analyses are admissible but must be clearly justified in the text, appropriately caveated, and reported in a separate section of the Results titled “Exploratory analyses”. Authors should be careful not to base their conclusions heavily on the outcome of statistically significant post hoc analyses.

In considering papers at Stage 2, reviewers will be asked to decide:

- Whether the data are able to test the authors’ proposed hypotheses by satisfying the approved outcome-neutral conditions (such as quality checks, positive controls)

- Whether the Introduction, rationale and stated hypotheses are the same as the approved Stage 1 submission (required)

- Whether the authors adhered precisely to the registered experimental procedures

- Whether any unregistered post hoc analyses added by the authors are justified, methodologically sound, and informative

- Whether the authors’ conclusions are justified given the data.

Reviewers are informed that editorial decisions will not be based on the perceived importance, novelty or conclusiveness of the results.

Word limits, Stage 1: Intro: 1,000 words; Method: no limit

Word limits, Stage 2: Intro: 1,000 words; Method: no limit; Results: no limit; Discussion: 1,000 words.

See guidelines above for Research Article article types for more details about what should be included in each section.

Registered Report with Existing Data

Authors wishing to submit a Registered Report using existing data can do so, provided that they have not yet had access to the data (i.e., they are not the owners of the data, have not been sent the dataset, and have not otherwise been given access to the data). This corresponds to Level 4 in the Peer Community in Registered Reports “levels of bias control”. The titles of these articles must start with “Registered Report with Existing Data”, and the level of bias control (4 or 5) should be reported in the Research Transparency Statement.

Peer review of Registered Reports with Existing Data will follow the same process as for Registered Reports, but of course the design and methods of the study cannot be altered, and so the rigor of the design and methods, and their match to the research questions and aims, will be a major factor in Stage 1 evaluation.

In exceptional cases, we may consider Registered Reports with Existing Data at levels 2 or 3 of bias control, when the quality and value of the research is exceptionally high, and risk of bias can be greatly reduced through other means. Authors interested in this option should complete a pre-submission inquiry using this form.

Word limits, Stage 1: Intro: 1,000 words; Method: no limit

Word limits, Stage 2: Intro: 1,000 words; Method: no limit; Results: no limit; Discussion: 1,000 words.

See guidelines above for Research Article and Registered Report article types for more details about what should be included in each section.

Commentary. Commentaries critique and/or supplement articles previously published in Psychological Science. The major criteria for a Commentary are that it provides a new perspective on the article it is commenting on, and that this new perspective makes an important difference to the interpretation of the target article. Commentaries can include new data and/or new analyses of existing data, but they need not. Authors are not permitted to write Commentaries on articles on which they are an author or coauthor. Commentaries are limited to 1,000 words (includes main text, notes, acknowledgments, and appendices; does not include 150-word abstract, Method and Results sections, or reference list), 20 references, and one figure (no more than two panels) or one table. The title of a Commentary must begin with the word “Commentary”.

References: Authors are encouraged to cite only the sources that bear on the point directly and to refrain from extensive parenthetical lists of related materials, keeping in mind that citations are meant to be supportive and not exhaustive. As a general rule, 20 citations should be sufficient for most Commentaries. However, this is not a hard-and-fast limit, and editors have the flexibility to allow more references if they are necessary to establish the scientific foundation for the work.

In addition to reviews by two (or more) independent experts, the Action Editor typically solicits a signed review of a submitted Commentary from the lead author of the target article; the editor interprets this review in light of the lead author’s potential conflict of interest (positive or negative). On acceptance of a Commentary that is critical of the target article, the Action Editor typically will invite the lead author of the target article to submit a Reply to Commentary (see below).

Reply to Commentary. Replies to Commentaries give the authors of articles that are targets of Commentaries an opportunity to respond formally. Replies to Commentaries are by invitation only, and like the Commentaries that initiated them, they are subject to external review (including by the author of the Commentary) and their acceptance is not assured. The major criterion for a Reply to Commentary is that it makes a unique scientific contribution that makes an important difference to the interpretation of the Commentary. Replies to Commentaries are limited to 1,000 words (includes main text, notes, acknowledgments, and appendices; does not include 150-word abstract, 150-word Statement of Relevance [see Research Article], cover page, Method and Results sections, or reference list), 20 references, and one figure (no more than two panels) or one table. The title of the Reply to Commentary must be “Reply to [title of Commentary]”.

References: Authors are encouraged to cite only the sources that bear on the point directly and to refrain from extensive parenthetical lists of related materials, keeping in mind that citations are meant to be supportive and not exhaustive. As a general rule, 20 citations should be sufficient for most Replies. However, this is not a hard-and-fast limit, and editors have the flexibility to allow more references if they are necessary to establish the scientific foundation for the work.

Table 1. Limits for Psychological Science Articles by Type

In the last four columns ("What counts toward the word limit?"), an X marks the sections that count toward the word limit.

| Article Type | Word Limit | Reference Limit* | Figure and/or Table Limit | Introduction & Discussion | Method & Results | Notes, Acknowledgments, Appendices | Cover Page, Abstract, Statement of Relevance, References |
|---|---|---|---|---|---|---|---|
| Research Article | 2,000 | 40* | n/a | X | | X | |
| Registered Report or Registered Report with Existing Data | 2,000 (1,000 for Stage 1) | 40* | n/a | X (1,000 words each for Introduction and Discussion) | | X | |
| Commentary or Reply to Commentary | 1,000 | 20* | 1 | X | | X | |

*These are not hard-and-fast limits and editors have the flexibility to allow more references if they are necessary to establish the scientific foundation for the work.

**For commentaries reporting new data, Method and Results sections are not included in the word count.

Manuscript Style, Structure, and Content

Manuscripts published in Psychological Science must follow the style of the Publication Manual of the American Psychological Association, 7th edition, with respect to the order of manuscript sections, headings and subheadings, references, abbreviations, and symbols. Please embed tables and figures within the main text. For initial submissions, authors may deviate from some of the style requirements (e.g., heading and subheading style, reference format, location of tables and figures). However, invited revisions and final versions of manuscripts must follow APA style. For all article types, manuscripts must be anonymized as to authors and originating institutions for initial review. To facilitate this, authors will be asked to upload an anonymized version of the submission.

Further guidance can be found on our Manuscript Structure, Style, and Content Guidelines page.

File Types

You may upload your manuscript and ancillary files as Word .doc or .docx, as .rtf, as .pdf, or as .tex. If you submit a .tex file, please also submit a PDF file conversion, as the submission portal cannot render .tex files in the PDF proof.

Badges

For manuscripts submitted on or after January 1, 2024, badges will not be offered for open data, open materials, or preregistration. Instead, open data and materials will be required (with exemptions considered on a case-by-case basis, see next section), and preregistration will be a factor in editorial evaluations (see Preregistration section below). The availability of data, analysis scripts, materials, and preregistrations will be reported prominently at the top of each article, in a Research Transparency Statement that will be required upon submission for all empirical manuscripts (see next section) and that will be shared with editors and reviewers for evaluation during peer review.

Research Transparency Statement

Transparency enables the scientific community to thoroughly evaluate, efficiently reuse, and independently verify research. To support these goals, Psychological Science requires authors to make their research as open as possible and as closed as necessary. When full transparency is complicated by legal, ethical, or practical constraints, the journal will work with authors to resolve these constraints as far as possible (see “Transparency constraints and responsible sharing” below). Any unresolvable constraints on transparency must be stated and justified in the published manuscript.

Limits on transparency will be a factor in editorial decisions, with editors weighing the degree of transparency, the reasons for non-transparency, and the potential costs and benefits of allowing non-transparency. Non-transparency that is motivated by the best interest of science (e.g., to prevent re-identification of participants, or to protect data sovereignty for Indigenous groups, endangered species, or public welfare) will be evaluated more favorably than non-transparency motivated by the interests of the authors or the data owners.

To streamline transparent reporting, Psychological Science requires all empirical manuscripts (Research Articles, Registered Reports, Registered Reports with Existing Data, and any Commentaries or Replies to Commentaries that include new data or analyses) to include a single Research Transparency Statement containing disclosures related to preregistration, availability of materials, data, and analysis scripts, selective reporting, use of artificial intelligence, conflicts of interest, and funding. Authors should build their Research Transparency Statement with our dedicated app (link to app coming), which will save time and ensure that all important information is reported. The app will produce a complete Research Transparency Statement that can be copied and pasted into authors’ manuscripts. The Research Transparency Statement should be a separate section of the manuscript, inserted between the Abstract and the Introduction. It does not count toward word limits.

Until our app is available, please use the following template to construct your Research Transparency Statement.

TEMPLATE

Research Transparency Statement

General Disclosures

Conflicts of interest: [response]. Funding: [response]. Artificial intelligence: [response]. Ethics: [response]

Study One Disclosures

Preregistration: [response]. Materials: [response]. Data: [response]. Analysis scripts: [response]

Study Two Disclosures

Preregistration: [response]. Materials: [response]. Data: [response]. Analysis scripts: [response]

EXAMPLE

Research Transparency Statement

General Disclosures

Conflicts of interest: Bob Vance declares ownership of Vance Refrigeration. All other authors declare no conflicts of interest. Funding: This research was supported by Australian Research Council grant FT118118. Artificial intelligence: No artificial-intelligence-assisted technologies were used in this research or the creation of this article. Ethics: This research received approval from a local ethics board (ID: 424242).

Study One Disclosures

Preregistration: The hypotheses and methods were preregistered (https://doi.org/10.1080/) prior to data collection. The analysis plan was not preregistered. There were major and minor deviations from the preregistration (for details see Supplementary Table 1). Materials: All study materials are publicly available (https://doi.org/10.1080/). Data: All primary data are publicly available (https://doi.org/10.1080/). Analysis scripts: All analysis scripts are publicly available (https://doi.org/10.1080/).

Study Two Disclosures

Preregistration: No aspects of the study were preregistered. Materials: Some study materials are publicly available (https://doi.org/10.1080/). The survey instrument cannot be shared because of copyright restrictions, but is available from Pam & Jim (2018). Data: Some primary data are publicly available (https://doi.org/10.1080/). The interview data cannot be shared because it contains identifying information which cannot be removed; however, it is available on request to the corresponding author. Analysis scripts: All analysis scripts are publicly available (https://doi.org/10.1080/).

Authors must commit to maintaining access to the files (data, etc.) linked in the Research Transparency Statement. The files must not be modified or deleted except in exceptional circumstances (e.g., accidental release of sensitive information). If authors modify or delete files after publication, they must notify the journal.

We recognize that research transparency norms are evolving, and we are always ready to listen to authors’ views. If you have any questions or concerns regarding the requirements outlined in this section, we encourage you to raise them with the STAR team (psych.star.team@gmail.com).

Preregistration

Preregistration can reduce bias, increase transparency, and help readers calibrate their confidence in scientific claims (Hardwicke & Wagenmakers, 2023). Preregistration involves declaring a research plan (for example, aims/hypotheses, methods, and analyses) in a public registry before research outcomes are known (usually before data collection begins). A preregistration is a plan, not a prison: the goal is to make clear what was planned and what was not (DeHaven, 2017). Deviations from preregistrations may be necessary or desirable. When deviations occur (and they almost always do), authors should describe them explicitly, and we strongly recommend using the Psychological Science preregistration deviation disclosure table (based on Willroth & Atherton, 2023) and including it in the first Supplemental File. Authors should consider taking additional measures to reduce the risk of bias incurred by deviations, such as robustness checks (see Box 2 in Hardwicke & Wagenmakers, 2023).

As part of the Research Transparency Statement, Psychological Science requires that all research articles state upon submission whether each reported study was preregistered or not, and which core aspects of the study (research questions/hypotheses, methods, analyses) were preregistered. Authors should explicitly state that preregistration occurred prior to data collection or clarify whether any relevant data existed and/or had been observed. The Research Transparency Statement must contain a publicly accessible persistent identifier (e.g., DOI) to all relevant preregistrations. Authors should state if any deviations from the preregistration occurred (they may refer to them as ‘major’ or ‘minor’) and refer readers to a preregistration deviation disclosure table in the first Supplemental File for details. All major deviations should also be reported clearly in the main text, wherever relevant.

Authors can use any trusted online registry to preregister their research. For beginners, we recommend following the Open Science Framework’s step-by-step workflow (osf.io/prereg). Note that for manuscripts submitted on or after January 1, 2024, “preregistered” badges will no longer be offered, but preregistration status and quality will be one factor in editors’ decisions, when relevant.

Materials

Sharing study materials ensures that research can be thoroughly evaluated and enables independent re-use of research outputs, helping the research community efficiently build upon previous studies. Authors must share all materials necessary for an independent researcher to evaluate and replicate each reported study. This typically includes all stimuli, manipulations, measures, or instruments, as well as details of procedures (e.g., instructions to participants, instructions to experimenters and/or confederates, experimenter and/or confederate scripts, instructions to coders, recruitment materials, consent forms) and custom experimental software. Screen recordings or video protocols can also be helpful ways to communicate study methods.

Upon submission, Psychological Science requires authors to make all original study materials publicly available in a trusted online repository, unless there are reasonable constraints. When unresolvable constraints exist, they must be stated and justified in the Research Transparency Statement. Authors should share files in any original proprietary formats (e.g., Qualtrics or Google Forms) as well as open format equivalents (e.g., plain text, PDF) to maximize accessibility. Materials should be accompanied by an open license (see “Licensing materials, data, and analysis scripts” below). Materials should be clearly documented to explain what they are and how they can be reused. The Research Transparency Statement must contain a publicly accessible persistent identifier (e.g., DOI) for all shared materials. Note that for manuscripts submitted on or after January 1, 2024, “open materials” badges will no longer be offered.

Data

Data are the evidence that underlies scientific claims: sharing data enables independent verification and reuse, facilitating error correction, evidence synthesis, and novel discovery. Raw data refers to the original quantitative or qualitative recordings, e.g., handwriting in a paper questionnaire, responses on a computer keyboard, or physiological readings. Primary data refers to the first digital (and, if necessary, anonymized) version of the raw data, otherwise unaltered. This includes data that is later excluded from the analysis. Raw and primary data may be equivalent (e.g., when online survey software records responses in a digital format), or some minimal processing may be necessary to convert the raw data into primary data (e.g., converting handwritten survey responses to digital format, or stripping participant IP addresses from the raw data file). Processed data has been altered in some way beyond digitization and anonymization (e.g., renaming columns, removing variables).

Upon submission, Psychological Science requires authors to make all primary research data publicly available in a trusted online repository, unless there are reasonable constraints. When unresolvable constraints exist, they must be stated and justified in the Research Transparency Statement. Where possible, authors should share data in an open format (e.g., csv) to maximize accessibility. Authors are encouraged to share processed ‘ready-to-analyze’ data in addition to primary data, as it is often easier for other researchers to work with. Data should be accompanied by an open license (see “Licensing materials, data, and analysis scripts” below) and documented with a data dictionary or codebook file that clearly explains the contents of the data file(s), when the data were collected, and who collected the data. Note that for manuscripts submitted on or after January 1, 2024, “open data” badges will no longer be offered.
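A codebook is usually written by hand, but generating a skeleton from the primary data file helps ensure that no variable is missed. The Python sketch below shows one illustrative way to do this with the standard-library csv module; the function names and the example data are hypothetical, and the description of each variable (as well as when and by whom the data were collected) still has to be written by the authors.

```python
import csv
import io

CODEBOOK_FIELDS = ["variable", "n_nonmissing", "examples", "description"]

def codebook_skeleton(data_file):
    """Build one codebook row per column: variable name, count of non-empty
    values, up to three distinct example values, and an empty description."""
    reader = csv.DictReader(data_file)
    values = {name: [] for name in reader.fieldnames}
    for row in reader:
        for name in reader.fieldnames:
            value = (row.get(name) or "").strip()  # short rows yield None
            if value:
                values[name].append(value)
    return [
        {
            "variable": name,
            "n_nonmissing": len(observed),
            "examples": "; ".join(sorted(set(observed))[:3]),
            "description": "",  # to be written by the authors
        }
        for name, observed in values.items()
    ]

def write_codebook(rows, out_file):
    """Write the codebook rows as CSV so it can be shared next to the data."""
    writer = csv.DictWriter(out_file, fieldnames=CODEBOOK_FIELDS)
    writer.writeheader()
    writer.writerows(rows)

# Hypothetical example data: two participants, one with a missing age.
example = io.StringIO("id,age,condition\n1,23,A\n2,,B\n")
rows = codebook_skeleton(example)
```

Before sharing the generated file, check that the example values do not themselves reveal identifying information.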

Analysis scripts

An analysis script is ideally computational code (e.g., R or Python) or software syntax (e.g., SPSS), but can also be detailed step-by-step instructions for analyses performed in point-and-click software. Analysis scripts provide a record of exactly how the analyses reported in the manuscript were performed. This enables an independent researcher to thoroughly evaluate the analyses and verify that the results are computationally reproducible (i.e., that repeating the original analyses with the original data yields the reported results). Analysis scripts should completely document all of the steps performed to transform the raw data into the primary/processed data (if any), and then into the numerical values reported in the manuscript (including reorganizing, filtering, transforming, analyzing, and visualizing the data).

Upon submission, Psychological Science requires authors to make all original analysis scripts publicly available in a trusted online repository, unless there are reasonable constraints. When unresolvable constraints exist, they must be stated and justified in the Research Transparency Statement. Authors must document all analysis steps necessary for an independent researcher to reproduce all numerical values in the manuscript from the raw data. Authors should ideally share analysis scripts in an open format (e.g., R, Python), though proprietary formats are acceptable for commonly used software (e.g., MATLAB, SPSS). Analysis scripts should be accompanied by an open license (see “Licensing materials, data, and analysis scripts” below) and clearly documented to explain what they do and how an independent researcher should re-use them to reproduce all numerical values reported in the manuscript. The Research Transparency Statement must contain a publicly accessible persistent identifier (e.g., DOI) for the analysis script(s). We also encourage researchers to document and share the software environment in which the analyses were performed using tools such as Docker, Binder, or Code Ocean.

Computational Reproducibility

All manuscripts submitted to Psychological Science are expected to be computationally reproducible. That is, a reader should be able to run the authors’ analysis scripts on the authors’ data and reproduce the results reported in the manuscript, tables, and figures. As part of the STAR review after conditional acceptance, we will conduct computational reproducibility checks.

To ensure their work is computationally reproducible, authors should accompany analysis scripts with a README file that provides clear step-by-step instructions for repeating the analyses. Any software dependencies (e.g., package versions) should be identified. Authors should make clear how the output of the analysis scripts corresponds to the results reported in the manuscript. We strongly encourage authors to perform a reproducibility check within their own team before submitting a manuscript, as this will reduce delays at the conditional acceptance stage.
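For Python-based analyses, one low-effort way to identify software dependencies is to record the interpreter and package versions and ship that record with the README. The sketch below uses only the standard library (`importlib.metadata`); the function name and the package list are placeholders. R users would typically record the equivalent information with `sessionInfo()` or a similar tool.

```python
import platform
import sys
from importlib import metadata

def describe_environment(packages):
    """Return a commented interpreter line plus one line per package,
    in a pip-requirements-like format ("name==version")."""
    lines = [f"# Python {platform.python_version()} ({sys.platform})"]
    for name in packages:
        try:
            lines.append(f"{name}=={metadata.version(name)}")
        except metadata.PackageNotFoundError:
            # Keep missing packages visible rather than silently skipping them.
            lines.append(f"# {name}: not installed")
    return lines

if __name__ == "__main__":
    # Placeholder package list: replace with the packages the analysis scripts import.
    for line in describe_environment(["numpy", "pandas"]):
        print(line)
```

Running this at the end of the analysis and saving the output next to the scripts gives readers the exact versions that produced the reported results.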

Licensing materials, data, and analysis scripts

Authors are strongly encouraged to share their materials, data, and analysis scripts under a Creative Commons CC0 license, a universal public domain declaration that ensures maximal reuse. Alternative re-use licenses may be acceptable, at the discretion of the Editors. Note that CC0 does not obviate cultural expectations that scholarly credit is given through citation (Hrynaszkiewicz & Cockerill, 2012).

Transparency constraints and responsible sharing

In some cases, legal, ethical, or practical factors can complicate the sharing of some or all materials/data/analysis scripts. Authors are responsible for ensuring that sharing is handled responsibly, while also maximizing transparency as a scientific priority (Meyer, 2018). We recognize that the optimal balance between these responsibilities is not always clear, and we are always ready to discuss potential constraints on transparency with authors.

Authors are expected to take steps to resolve or minimize constraints where possible, for example, by seeking permission to share from third-party data/materials providers, or by sharing the data/materials in a third-party repository that manages restricted access. When transparency constraints cannot be fully resolved, they must be stated and justified in the Research Transparency Statement. Supporting documentation should be provided where possible (for example, a data use agreement or a letter from the local ethics board outlining why the data/materials cannot be shared publicly).

If authors are using data/materials/analysis scripts from a third-party source, they should seek permission to re-share those files alongside the current manuscript. If re-sharing is not possible, authors should clearly state in the Research Transparency Statement how other researchers can obtain the resources. Authors should also use appropriate citation standards to refer to any third-party source in the text and reference section. Citations to third-party resources should be accompanied by a persistent identifier (e.g., DOI).

Authors should also ensure that shared datasets are suitably anonymized and do not contain personally identifiable information, such as names, addresses, birth dates, IP addresses, emails, or recruitment platform identifiers (e.g., Prolific or MTurk IDs).
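Dropping direct identifiers is often the minimal processing step that turns raw data into primary data. The Python sketch below copies a CSV file while omitting a set of identifying columns; the column names and example row are hypothetical and must be adapted to the actual data. Removing these columns alone does not guarantee anonymity: combinations of indirect identifiers (e.g., age, location, rare demographic categories) can still allow re-identification and may need further treatment.

```python
import csv
import io

# Hypothetical column names for direct identifiers; adapt to the actual file.
IDENTIFYING_COLUMNS = {"name", "email", "birth_date", "ip_address", "prolific_id", "mturk_id"}

def drop_identifiers(raw_file, primary_file, identifying=IDENTIFYING_COLUMNS):
    """Copy a CSV file, omitting columns whose (case-insensitive) name is in
    `identifying`. Returns the list of columns that were kept."""
    reader = csv.DictReader(raw_file)
    kept = [c for c in reader.fieldnames if c.strip().lower() not in identifying]
    # extrasaction="ignore" makes DictWriter skip the dropped keys in each row.
    writer = csv.DictWriter(primary_file, fieldnames=kept, extrasaction="ignore")
    writer.writeheader()
    for row in reader:
        writer.writerow(row)
    return kept

raw = io.StringIO("participant,prolific_id,ip_address,rt_ms\n1,5f3a,192.0.2.1,350\n")
primary = io.StringIO()
kept_columns = drop_identifiers(raw, primary)
```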

Funding disclosures

Any sources of funding related to the research or manuscript must be disclosed. If there are no funding sources, this must be stated explicitly.

Conflict of interest disclosures

All authors must declare any conflicts of interest related to the research or manuscript. If there are no conflicts of interest, this must be stated explicitly. Conflicts of interest include not just financial conflicts, but any author’s role, relationship, or commitment that presents an actual or perceived threat to the integrity or independence of the research or its publication.

Artificial intelligence disclosures

Authors must disclose in the Research Transparency Statement whether they used any artificial intelligence (AI) technologies, such as large language models (e.g., ChatGPT), during the research or the production of the manuscript. The Editors and reviewers will judge whether the use of AI is appropriate.

Statistics

Psychological Science encourages authors to consider the statistical approaches best suited to their aims. The editors recommend considering a range of approaches, including hypothesis testing and estimation. Frequentist approaches (e.g., null hypothesis significance testing), Bayesian approaches (e.g., Bayes factors), and machine learning, among others, can all be appropriate when carried out with care and reported transparently.

When conducting multiple statistical tests on the same research question, authors should take into account the increased risk of false positive results, and correct for multiple comparisons where appropriate (see, e.g., Lakens, 2020).
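As one common example of a multiple-comparison correction, the Python sketch below implements the Holm-Bonferroni procedure, which controls the familywise error rate. It is an illustration only, not a claim that Holm is the right correction for every design; the function name is hypothetical. Each adjusted p value can be compared directly with the nominal alpha level.

```python
def holm_adjust(p_values):
    """Return Holm-adjusted p values in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # The (rank + 1)-th smallest p value is multiplied by (m - rank), capped at 1.
        candidate = min(1.0, (m - rank) * p_values[i])
        # Enforce monotonicity: an adjusted p value never falls below the previous one.
        running_max = max(running_max, candidate)
        adjusted[i] = running_max
    return adjusted

p_raw = [0.01, 0.04, 0.03, 0.005]
p_adj = holm_adjust(p_raw)
reject = [p <= 0.05 for p in p_adj]
```

Here the adjusted values are .03, .06, .06, and .02, so only the first and last tests remain significant at α = .05.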

For synthesizing evidence across multiple studies in the same manuscript, authors should be mindful of the increased risk of bias with common “internal meta-analysis” approaches (Vosgerau et al.).

Authors must include effect sizes (standardized or unstandardized) and a measure of uncertainty (e.g., confidence intervals) for their major results. In addition, authors should report distributional information for their key variables, ideally in figures (e.g., box plots with individual data points added). Fine-grained graphical presentations that show how data are distributed are often the most transparent way of communicating results. Please report and plot confidence intervals instead of standard deviations or standard errors around means of dependent variables, because confidence intervals convey more useful information.
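As one example of an effect size reported with a measure of uncertainty, the Python sketch below computes Cohen's d for two independent groups (using the pooled standard deviation) with an approximate 95% confidence interval. The interval relies on a common large-sample standard error, sqrt((n1 + n2)/(n1·n2) + d²/(2(n1 + n2))), chosen here for brevity; with small samples, exact intervals based on the noncentral t distribution are preferable. The function name and data are hypothetical.

```python
import math
from statistics import NormalDist, mean, stdev

def cohens_d_with_ci(group_a, group_b, level=0.95):
    """Cohen's d (pooled SD) for group_a minus group_b, with a normal-approximation CI."""
    n1, n2 = len(group_a), len(group_b)
    s1, s2 = stdev(group_a), stdev(group_b)  # sample standard deviations
    pooled = math.sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    d = (mean(group_a) - mean(group_b)) / pooled
    # Large-sample standard error of d.
    se = math.sqrt((n1 + n2) / (n1 * n2) + d**2 / (2 * (n1 + n2)))
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return d, (d - z * se, d + z * se)

d, (ci_low, ci_high) = cohens_d_with_ci([1, 2, 3, 4, 5], [3, 4, 5, 6, 7])
```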

Authors should investigate the extent to which statistical and/or causal assumptions of the model are met, and discuss implications of unmet assumptions.

Any claim about the absence of, for example, differences or associations must be supported by appropriate inferential statistics. For example, frequentist equivalence testing or Bayes factors (with appropriate equivalence margins or prior distributions, respectively) are well-suited approaches. When no supporting inferential statistics are reported, care must be taken to clearly communicate the absence of evidence.
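Frequentist equivalence testing is often carried out with two one-sided tests (TOST): an effect is declared equivalent within the margins [lower, upper] only if it is significantly above the lower margin and significantly below the upper margin. The Python sketch below uses a normal (z) approximation for simplicity, assuming a large sample and a known standard error; a t-based version is usual for small samples. The function name is hypothetical, and the margins themselves must be justified on substantive grounds (ideally preregistered), not chosen after seeing the data.

```python
from statistics import NormalDist

def tost_z(estimate, se, lower, upper, alpha=0.05):
    """Two one-sided tests for equivalence (normal approximation).
    Returns (p value, whether equivalence is supported at alpha)."""
    norm = NormalDist()
    # Test 1: H0 is estimate <= lower; a small p supports estimate > lower.
    p_lower = 1.0 - norm.cdf((estimate - lower) / se)
    # Test 2: H0 is estimate >= upper; a small p supports estimate < upper.
    p_upper = norm.cdf((estimate - upper) / se)
    # Both tests must reject, so the TOST p value is the larger of the two.
    p = max(p_lower, p_upper)
    return p, p < alpha

p_eq, equivalent = tost_z(0.02, 0.05, -0.2, 0.2)
p_ne, not_shown = tost_z(0.10, 0.10, -0.2, 0.2)
```

In the second call the estimate lies inside the margins, but its uncertainty is too large to rule out effects at the upper margin, so equivalence is not supported.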

Reporting Style for Statistical Results

Please report test statistics to two decimal places (e.g., t(34) = 5.67) and probability values to three decimal places. In addition, exact p values should be reported for all results greater than .001; p values below .001 should be reported as “p < .001.” Authors should be particularly attentive to APA style when typing statistical details (e.g., Ns for chi-square tests, formatting of dfs). Special mathematical expressions should not be inserted as graphic objects but rather with Word’s Equation Editor or a similar tool.
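These conventions are easiest to apply consistently when results are formatted by the analysis script rather than typed by hand. A minimal Python sketch, assuming two-decimal test statistics, three-decimal p values, and the APA convention of omitting the leading zero for p; the function names are hypothetical.

```python
def format_stat(value):
    """Format a test statistic to two decimal places, e.g. 5.671 -> '5.67'."""
    return f"{value:.2f}"

def format_p(p):
    """Format a p value: exact to three decimals, or 'p < .001' below .001."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie between 0 and 1")
    if p < 0.001:
        return "p < .001"
    text = f"{p:.3f}"
    # APA style drops the leading zero for quantities that cannot exceed 1.
    if text.startswith("0"):
        text = text[1:]
    return f"p = {text}"

def format_t_result(t, df, p):
    """Combine the pieces, e.g. 't(34) = 5.67, p < .001'."""
    return f"t({df}) = {format_stat(t)}, {format_p(p)}"
```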

Guidelines for Reporting Neuroimaging Data

Studies involving neuroimaging methods typically entail much larger numbers of measurements than behavioral research and require specialized frameworks for preprocessing and statistical analysis. The Organization for Human Brain Mapping has developed a set of documents outlining best practices for reporting these data types, which we recommend that authors follow. We refer authors to Nichols et al. (2016) and Pernet et al. (2020) for useful discussions of best practices in reporting MRI and EEG/MEG research, respectively. Checklists for methods reporting are available for MRI (Table 4 in Nichols et al., 2016) and EEG/MEG (Tables 1 and 2 in Pernet et al., 2020). All items listed as mandatory in those checklists should be reported in the main text or Supplemental Files.

Preregistration of neuroimaging studies can be challenging due to the complexity of the methodology and data analysis, but authors are encouraged to preregister at least a minimum set of details, including planned sample size, inclusion/exclusion criteria, planned hypotheses, anatomical regions of interest, and methods to be used for multiple comparison correction.

All whole-brain neuroimaging analyses must employ principled methods to correct for multiple comparisons. For region of interest (ROI)-based analyses, the process of ROI selection should be clearly stated in the main text; in general, ROIs should be selected prior to any analyses of the data and preferably preregistered. Reports of ROI analyses should include effect size estimates. Any ROI-based analyses should be accompanied by whole-brain analyses for the same effect. A complete table of activation coordinates, together with their associated statistics, should be provided for each hypothesis test; such tables should typically be placed in the Supplemental Files.

The strongest submissions will report results for appropriately large samples and/or report a preregistered replication study. There is no general rule for sample sizes, as they depend on the nature of the hypothesis being tested. For instance, a study concerning correlations between brain structure/function and behavioral measures will generally require samples in the hundreds to thousands of individuals due to small effect sizes (Marek et al., 2022) and low reliability (Elliott et al., 2020), whereas smaller samples are likely suitable for studies of task-specific brain activation, depending upon the effect size of interest. Prospective power analyses for fMRI (Mumford, 2012) require unbiased estimates of effect sizes, which are difficult to obtain without large prior samples and independent regions of interest; when these are not available, the sample size should be justified and preregistered.
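To see why correlational brain-behavior studies need such large samples, consider the common Fisher z approximation for the sample size needed to detect a true correlation r with a two-sided test: n ≈ ((z(1 - α/2) + z(power)) / atanh(r))² + 3. The Python sketch below applies it. It is a simplified textbook calculation, not an fMRI power analysis of the kind discussed by Mumford (2012), and it assumes r is the true correlation between the measured variables; the function name is hypothetical.

```python
import math
from statistics import NormalDist

def n_for_correlation(r, alpha=0.05, power=0.80):
    """Approximate N to detect a true correlation r (two-sided test),
    using the Fisher z approximation."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    effect = math.atanh(r)  # Fisher z transform of r
    return math.ceil(((z_alpha + z_power) / effect) ** 2 + 3)

for r in (0.30, 0.10):
    print(f"r = {r:.2f}: N = {n_for_correlation(r)}")
```

With α = .05 and 80% power, this gives N = 85 for r = .30 but N = 783 for r = .10. Low measurement reliability attenuates the observable correlation, which pushes the required sample size higher still.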

Psychological Science will place emphasis on those functional neuroimaging studies that make a clear and compelling contribution to understanding psychological mechanisms. Therefore, authors should describe a clear linking proposition between the neuroscientific measurements and psychological theory.

Because genetic associations identified using candidate gene approaches have historically been largely irreproducible (Hewitt, 2012), Psychological Science will only entertain candidate gene studies that are sufficiently powered to identify associations of a realistic magnitude at a genome-wide level of significance.

Research Using Machine Learning Methods

Research using machine learning methods should adhere to the Reporting Standards for ML-based Science (REFORMS) reporting checklist (Kapoor et al., 2023). Computer code must be shared for all analyses.

Research Using Computational Models

Research involving the development or use of computational models should follow best practices outlined by Wilson and Collins (2019). Computer code must be shared for any new models that are developed.

StatCheck

Authors who use null hypothesis significance testing should run their manuscript through StatCheck, a statistical “spellchecker” that can detect inconsistencies between different components of inferential statistics (e.g., t value, df, and p). StatCheck is available as a free online app at http://statcheck.io/.
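The core idea behind such a check is to recompute p from the reported test statistic and degrees of freedom and compare it with the reported p. The Python sketch below illustrates that idea for two-tailed t tests only; it is not StatCheck's own code and covers far fewer cases (StatCheck also handles F, r, χ², and z tests). It computes the t-distribution tail probability from the regularized incomplete beta function, evaluated with the standard continued-fraction method (as in Numerical Recipes), and treats a report as consistent if the reported p could arise from any t value that rounds to the reported one. The function names and the rounding assumptions (t to two decimals, p to three) are illustrative.

```python
import math

def _betacf(a, b, x, max_iter=300, eps=3e-14):
    """Continued fraction for the incomplete beta function (modified Lentz)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c, d = 1.0, 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        for aa in (m * (b - m) * x / ((qam + m2) * (a + m2)),            # even step
                   -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))):  # odd step
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            delta = d * c
            h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h

def regularized_beta(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    bt = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                  + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return bt * _betacf(a, b, x) / a
    return 1.0 - bt * _betacf(b, a, 1.0 - x) / b

def t_test_p(t, df):
    """Two-tailed p value for a t statistic: I_{df/(df+t^2)}(df/2, 1/2)."""
    return regularized_beta(df / 2.0, 0.5, df / (df + t * t))

def is_consistent(t, df, reported_p, t_decimals=2, p_decimals=3):
    """True if reported_p matches p for some t that rounds to the reported t."""
    t_half = 0.5 * 10 ** -t_decimals
    p_half = 0.5 * 10 ** -p_decimals
    # A larger |t| gives a smaller p, so the bounds swap.
    p_min = t_test_p(abs(t) + t_half, df)
    p_max = t_test_p(max(abs(t) - t_half, 0.0), df)
    return p_min - p_half <= reported_p <= p_max + p_half
```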

Author Contributions

Authorship implies significant participation in the research reported or in writing the manuscript, including participation in the design and/or interpretation of reported experiments or results, participation in the acquisition and/or analysis of data, and participation in the drafting and/or revising of the manuscript. All authors must agree to the order in which the authors are listed and must have read the final manuscript and approved its submission. They must also agree to take responsibility for the work in the event that its integrity or veracity is questioned.

Furthermore, as part of our commitment to ensuring an ethical, transparent, and fair peer review and publication process, APS journals have adopted the use of CRediT (Contributor Roles Taxonomy). CRediT is a high-level taxonomy, including 14 roles that can be used to represent the roles typically played by contributors to scientific scholarly output.

These roles describe the possible contributions to the published work:

Conceptualization: Ideas; formulation or evolution of overarching research goals and aims

Methodology: Development or design of methodology; creation of models

Software: Programming, software development; designing computer programs; implementation of the computer code and supporting algorithms; testing of existing code components

Validation: Verification, whether as a part of the activity or separate, of the overall replication/reproducibility of results/experiments and other research outputs

Formal Analysis: Application of statistical, mathematical, computational, or other formal techniques to analyze or synthesize study data

Investigation: Conducting a research and investigation process, specifically performing the experiments, or data/evidence collection

Resources: Provision of study materials, reagents, materials, patients, laboratory samples, animals, instrumentation, computing resources, or other analysis tools

Data Curation: Management activities to annotate (produce metadata), scrub data and maintain research data (including software code, where it is necessary for interpreting the data itself) for initial use and later reuse

Writing – Original Draft: Preparation, creation and/or presentation of the published work, specifically writing the initial draft (including substantive translation)

Writing – Review & Editing: Preparation, creation and/or presentation of the published work by those from the original research group, specifically critical review, commentary or revision, including pre- or postpublication stages

Visualization: Preparation, creation and/or presentation of the published work, specifically visualization/data presentation

Supervision: Oversight and leadership responsibility for the research activity planning and execution, including mentorship external to the core team

Project Administration: Management and coordination responsibility for the research activity planning and execution

Funding Acquisition: Acquisition of the financial support for the project leading to this publication.

The submitting author is responsible for listing the contributions of all authors at submission. All authors should agree to their individual contributions prior to submission.

To meet SAGE’s authorship criteria, authors must have been responsible for at least one of the following CRediT roles:

- Conceptualization

- Methodology

- Formal Analysis

- Investigation

AND at least one of the following:

- Writing – Original Draft Preparation

- Writing – Review & Editing

Contributions will be published with the final article, and they should accurately reflect all contributions to the work. Any contributors with roles that do not constitute authorship (e.g., Supervision was the sole contribution) should be listed in the Acknowledgements.
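The authorship rule above combines an "at least one of" condition with an "AND". The Python sketch below encodes a mechanical reading of it, with role names copied from the criteria list; the function names and contributors are hypothetical, and editorial judgement still applies to borderline cases.

```python
# Role names copied from the SAGE criteria listed above.
RESEARCH_ROLES = {"Conceptualization", "Methodology", "Formal Analysis", "Investigation"}
WRITING_ROLES = {"Writing – Original Draft Preparation", "Writing – Review & Editing"}

def qualifies_as_author(roles):
    """True if the contributor holds at least one research role AND at least one writing role."""
    roles = set(roles)
    return bool(roles & RESEARCH_ROLES) and bool(roles & WRITING_ROLES)

def sort_contributors(contributions):
    """Split a {contributor: roles} mapping into (authors, acknowledgements)."""
    authors, acknowledgements = [], []
    for person, roles in contributions.items():
        (authors if qualifies_as_author(roles) else acknowledgements).append(person)
    return authors, acknowledgements

# Hypothetical contributors for illustration.
team = {
    "Contributor A": ["Conceptualization", "Writing – Original Draft Preparation"],
    "Contributor B": ["Formal Analysis", "Visualization"],
    "Contributor C": ["Supervision"],
}
authors, acknowledged = sort_contributors(team)
```

Contributor B has a research role but no writing role, so under this reading B would be listed in the Acknowledgements unless B also took part in writing or revising the manuscript.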

SAGE is a supporting member of ORCID, the Open Researcher and Contributor ID. We strongly encourage all authors and co-authors to use ORCID iDs during the peer-review process. If you already have an ORCID iD, please log in to your account on SAGE Track and edit the account information to link to your ORCID iD. If you do not already have an ORCID iD, please log in to your SAGE Track account to create your unique identifier and automatically add it to your profile. PLEASE NOTE: ORCID iDs must be linked to author accounts prior to manuscript acceptance or they will not be displayed upon publication. ORCID iDs cannot be linked during the copyediting phase.

Preparation of Graphics

The journal requires that, for accepted manuscripts, figures be embedded within the main document near where they are discussed in the text. A figure’s caption should be placed just below the figure. For initial submissions, tables and figures may be placed at the end of the manuscript.

Authors who are submitting revisions should also upload separate figure files that adhere to the APS Figure Format and Style Guidelines. Because the submission should be anonymized, files must not contain an author’s name. Submitting separate, production-quality files helps to facilitate timely publication should the manuscript ultimately be accepted.

Supplemental Files

Authors are free to submit certain types of Supplemental Files for online-only publication. If the manuscript is accepted for publication, such material will be published online on the publisher’s website via Figshare, linked to the article. Supplemental Files will not be copyedited or formatted; they will be posted online exactly as submitted.

The editorial team takes the adjective supplemental seriously. Supplemental Files should include the sort of material that enhances the reader’s understanding of an article but is not essential for understanding the article or evaluating the author’s claims. Supplemental Files should be uploaded during initial submission.

Contributor FAQ

Contributors are encouraged to consult the Contributor FAQ before submitting manuscripts to Psychological Science.

Accepted Manuscripts

OnlineFirst Publication and TWiPS

All accepted manuscripts are published online (OnlineFirst) as soon as they reach their final copyedited, typeset, and corrected form, and each accepted article appears in a monthly print issue of Psychological Science as well as in the digital This Week in Psychological Science (TWiPS), which is distributed weekly to all APS members.