Truth, Lies, and the Consequences of Science Denial

Chaired by Olivier Klein (far left), The Psychology of Anti-science Beliefs and Attitudes integrative science symposium at ICPS 2023 featured presentations from (left to right) Matthew Hornsey, Jon Roozenbeek, Myrto Pantazi, and Karen Douglas.

The truth bias and repetition • Unpacking conspiracy theories • Jiu-jitsu persuasion • A shot at inoculation

The year 2019 was one for the record books when it came to global organizations raising prescient alarm bells about looming threats to humanity. For example, the World Health Organization (WHO) and the World Economic Forum named climate change among the year’s greatest risks to global health and global financial stability, respectively. WHO and the U.S. Centers for Disease Control and Prevention (CDC), among others, also cautioned that vaccine hesitancy threatened to reverse decades of progress around the world in tackling vaccine-preventable diseases.

In 2020, these concerns became real in ways exceeding many scientists’ worst nightmares. By spring, the world was engulfed in the rapid spread of COVID-19, followed almost immediately by the rapid spread of skepticism toward vaccines that hadn’t even been developed yet. In June, only 71.5% of people in 23 countries expressed willingness to get vaccinated even “if proven safe and effective,” according to an article in Nature.

Devastating evidence of climate change also mounted in 2020, including wildfires that engulfed enormous swathes of the Amazon rainforest, southern and eastern Australia, and the western United States. Yet one poll found that just over half of people in Japan and 66% of people in the United States agreed that human activity contributes to climate change. Moreover, 44% of American adults in another survey were extremely or very sure that global warming hadn’t happened at all over the last 100 years, up from 31% in 2013.

What accounts for people’s resistance to science-backed evidence and their vulnerability to misinformation and conspiracy theories? And why have these characteristics become more common in recent years? In an integrative science symposium at APS’s 2023 International Convention of Psychological Science (ICPS) in Brussels, four psychological scientists from the European Union, the United Kingdom, and Australia shared a wide range of research exploring these determinants and trends, along with potential strategies for addressing them.

“Trust in science is a societally important topic as we face major environmental, geopolitical, and health crises,” said Olivier Klein (Université libre de Bruxelles), the program chair. “Yet the level of trust in science is very variable across the world and across time.” Here are some of the findings presented.

The truth bias and repetition

The growth of digital media has produced a proliferation of sources from which highly fragmented audiences can get their “news,” including sources that recirculate and reinforce beliefs that aren’t always based on fact or are even outright misinformation. In her ICPS presentation, Myrto Pantazi (Université libre de Bruxelles and University of Amsterdam) shared research on the cognitive antecedents of misinformation and why people hold antiscientific attitudes.

Pantazi’s research expanded on her past work on the truth bias, or the default tendency to believe information even in the presence of explicit refutations. The new research asked: Could the truth bias surface in the realm of news consumption and explain vulnerability to misinformation?

In a preliminary study, Pantazi asked two groups, one of students in Belgium and one of students and others in the United States, to read a series of news headlines and rate them as true (e.g., for the U.S. participants, “Inflation Has Gone up Every Year of the Biden Presidency”) or fake (“The CDC Admits Vaccines Cause Strokes”).

The results were inconsistent. The Belgian students appeared to be better at identifying the fake headlines (i.e., correct rejections), “basically exhibiting the reverse of a truth bias,” Pantazi said, whereas the U.S. participants were better at correctly identifying the true headlines (i.e., hits). She attributed these inconsistencies to possibilities including differences in the two samples or a familiarity/repetition-based memory bias.

A second study of two similar groups added the variable of repetition. Half of the headlines were repeated, and, again, the results were inconsistent. But although repetition did not have a clear effect on participants’ true/fake assessments, some of the results did suggest that repetition may result in a greater tendency to not believe true news, which, as previous research has suggested, may be equally problematic. “This might be at least as worrying as a truth bias,” Pantazi said. “We should look not only at the nefarious effects of misinformation, but also at how skeptical people are toward truthful information.”

Pantazi noted avenues for future research, including the importance of misinformation research that looks not only at how prone people are to believe false information but also at how much they disbelieve true information. “This can reveal biases that lead toward mistrust or gullibility,” she said.

Unpacking conspiracy theories

Conspiracy theories are having a moment. Whatever their assertions (e.g., the earth is flat, the government is lying about the safety of genetically modified foods, global warming is a hoax, COVID-19 is a bioweapon developed in a lab to wage war on the West), conspiracy theories often speak to at least one of three psychological motives among the people who hold them, according to APS Fellow Karen Douglas (University of Kent). Epistemic motives seek to reduce uncertainty by obtaining knowledge. Existential motives aim to feel safe and in control. And social motives include the desire to hold oneself and one’s groups in high regard, which may mean looking down upon others.

Belief in conspiracy theories can also be affected by factors at different levels, Douglas said, such as individual characteristics, intergroup factors such as conflict and prejudice, or nation-level factors such as corruption and inequality.

Although some conspiracy theories may seem harmless, many can have detrimental consequences by directly and negatively affecting science-related attitudes and intentions, Douglas said. Instead of making people feel more certain, they can make them feel less certain, more disillusioned, and more likely to disengage from science.

But what are the consequences for the individuals and groups who share conspiracy theories? What do people think of them?

Douglas and colleagues explored these questions in two studies. In one, participants read a quote attributed to a fictitious politician who either shared or refuted a deep-state conspiracy theory about the country being run by a nefarious “secret network” within the government. Asked to rate this politician on a number of dependent measures, participants in the pro-conspiracy (vs. anti-conspiracy) condition rated the politician as less honest and trustworthy but also more “brave” … “a rogue who might be able to effect change—not necessarily a negative outcome for an individual who’s looking to influence others,” Douglas said.

More crucially, she said, participants’ intentions to vote for this fictitious politician were moderated by their own beliefs in conspiracy theories and by their political orientation. Those with low beliefs in conspiracy theories and low right-wing attitudes were more likely to reject the pro-conspiracy candidate. The opposite held for participants with stronger conspiracy beliefs and stronger right-wing views, suggesting that politicians might benefit by sharing conspiracy theories with certain audiences.

In new research, Douglas and colleagues are exploring how audience characteristics impact the consequences for people who share science-related conspiracy theories. A study in early 2023 asked participants to rate their perceptions of a fictitious scientist. In one condition, the scientist said global warming is a myth intended to enrich climate scientists. In the other, the scientist noted ample evidence of a warming planet and called for more use of renewable energy.

Participants perceived the pro-conspiracy scientist as less honest and credible, among other dependent measures, but, unlike the fictitious politician in the previous study, not more “brave” or likely to effect change. The reputational costs (e.g., harm to credibility) appeared to be greatest for the pro-conspiracy scientist when the audience was low in conspiracy beliefs and less right-leaning in political orientation. But those costs were smaller, and the effects potentially even beneficial, when the audience was high in conspiracy beliefs and farther to the right.

“Sharing conspiracy theories has consequences for experts, but this depends crucially on audience characteristics,” Douglas said. Future research will look further at how people perceive individuals who share conspiracy theories and will also examine the effects of conspiracy theories emanating from the political left wing.

Jiu-jitsu persuasion

What can effectively address antiscience attitudes and beliefs? The answer is not simply more and better science education, according to Matthew Hornsey (University of Queensland). “The irony is that we live in a society that’s more educated than any time in human history, and yet significant chunks of the public remain skeptical about the scientific consensus around climate science, vaccinations, and evolution,” he said. For issues like these, it’s not clear that information and education alone will be effective. For instance, there’s no evidence that understanding evolutionary theory reduces belief in creationism.

So if most people have been exposed to the scientific evidence for these important scientific issues—and they have, Hornsey said—“it defies common sense to keep repeating the evidence over and over again.” And although myth-busting can sometimes have positive effects on science skepticism, “I think the notion that you can get salvation by just mashing data in people’s faces is a little naïve.”

An alternative explanation for science skepticism is motivated reasoning, in which people have already decided on their conclusion and retrofit the evidence to suit that conclusion. “For motivated reasoners, the notion of analyzing data is largely a charade designed to reinforce the conclusion they want to reach,” Hornsey said. “Rather than acting like cognitive scientists,” carefully weighing evidence and synthesizing it, people may tend to “behave more like cognitive lawyers, selectively attending to, critiquing, and remembering evidence in a way that’s convenient to the conclusion that they want to reach,” he said. In fact, “in the information-overload era, we’re seeing this renewed trend towards feeling your facts and focusing on your gut feelings and intuition.”

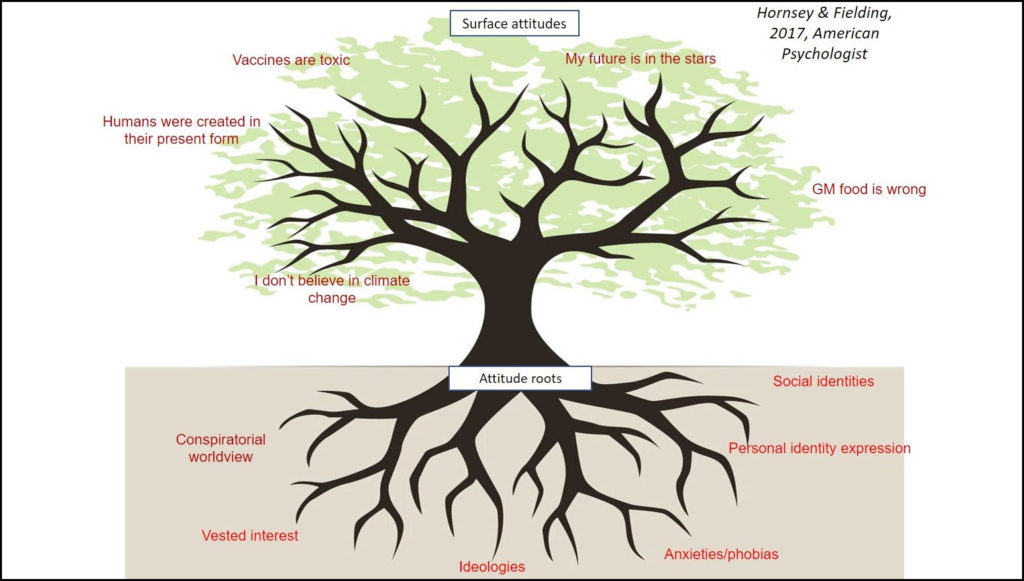

Understanding this reality, Hornsey said, transforms the question: Instead of asking why people reject science, ask why they would want to. He used the metaphor of a tree, in which the branches represent the surface attitudes (what people say) and the roots represent the psychological motivations for these attitudes, from anxieties and phobias to vested interests. The roots “give these surface attitudes strength and allow them to survive even in the face of contradictory evidence,” he said.

According to this model, addressing science skepticism requires screening out the surface attitudes and focusing on the attitude roots. Vested interests, for instance, come into play when the consequences of coming on board with the science are painful or inconvenient. “Smokers have a different relationship with the science around tobacco than nonsmokers,” he said as an example. And in the area of corporate vested interests, “If your livelihood depends on the oil and gas industry, you’re going to have a different relationship with climate science.”

Hornsey uses another metaphor to explain how to use this information to create change: jiu-jitsu persuasion, in which—as with the martial art—the goal is to work with your opponent’s momentum rather than fighting it. “You don’t just ignore people’s motivation,” he said. “You understand their motivation and then come up with persuasion techniques that align with that underlying motivation—that are congenial with it.”

In the area of climate science, for instance, the attitude root that is most powerful in explaining skepticism is the presence of conservative worldviews. In the spirit of jiu-jitsu, Hornsey advises making the conservative case for climate mitigation, using arguments such as how it will protect national security or a traditional way of life, instead of focusing on themes of harm and justice, which may resonate only with people on the left. “If you make the case that climate action resonates with conservative values, then suddenly conservatives no longer feel the need to reject the science,” Hornsey said.

A shot at inoculation

In the final presentation of the symposium, Jon Roozenbeek (University of Cambridge) outlined research conducted jointly with his colleague Sander van der Linden on innovative interventions intended to effectively “inoculate” people against disinformation. (Learn more at inoculationscience.com.)

In a 2022 interview, van der Linden explained inoculation theory as being “like a traditional vaccine that injects us with a weakened dose of a pathogen, which then triggers the production of antibodies” and confers immunity against future infections. The two-pronged process involves “pre-emptively exposing people to severely weakened doses of misinformation. First, we warn people in advance, and then we refute the misinformation before they even encounter it. This activates their intellectual and psychological immune system.” (Van der Linden’s new book, Foolproof, summarizes much of this research.)

Two major media stories underscore the need for these interventions. In the first, a patently false article from the satirical paper The Onion declaring Kim Jong-Un the “sexiest man alive” went on to be shared by a major Chinese news source as an actual news story. Amusing and bizarre, but no harm done, Roozenbeek noted: “We do not always equate falsehood with harm.”

Similarly, “we don’t always equate truth with harmlessness,” he said. In April of 2021, the Chicago Tribune—a respected news outlet—published an article with this headline: “A ‘Healthy’ Doctor Died Two Weeks After Getting a COVID-19 Vaccine; CDC Is Investigating Why.” Published at a time when antivaccination rhetoric was undermining efforts to stem the spread of the disease, this story went on to become one of the most viral Facebook stories of the year. “Every single word in this headline is true,” Roozenbeek noted. “Yet the implication—namely that the doctor died because he was vaccinated—was not. Phrasing matters.”

Inoculation theory is not a tool of persuasion in and of itself but is designed to prevent unwanted persuasion from happening in the first place, Roozenbeek explained. Recent innovations in this field include research by John Cook (George Mason University) that underscores the importance and scalability of inoculating people against not only specific instances of misinformation but also the manipulation strategies that underlie much misinformation, such as emotionally manipulative language and conspiratorial reasoning. Entertaining, app-based inoculation “games” are one means toward helping people acquire these immunities.

A new game, Roozenbeek explained, aims to take on antiscience beliefs specifically. “Bad Vaxx,” still in development, lets players compete as one of several different avatars representing specific manipulation techniques: emotional storytelling, pseudoscience and fake expertise, naturalistic fallacies, or conspiracy theories. There are two versions of the game, which takes about 10 minutes to play. In one version, the player takes the perspective of an apprentice learning the avatar’s technique. In the other, the player competes against the avatar.

In three separate randomized controlled trials, the researchers found a meaningful and significant boost in players’ ability to spot vaccine misinformation and to identify the correct manipulation technique in misleading social media content. “But the problem with games (or any lengthy intervention) is that they’re difficult to scale to social media environments, because not everyone wants to sit down and play a 10-minute game,” Roozenbeek clarified.

So, for another study, they created a series of short videos (each about 1 minute long) representing different manipulation techniques used to distract people from the truth. The study had about 1,000 participants and showed a “pretty huge improvement in discernment,” Roozenbeek said. However, he cautioned that positive lab results don’t always translate to the field and stressed the importance of a robust sample size.

To that end, a field study on YouTube placed the manipulation videos as ads. After seeing the video, viewers took a simple test to indicate what they had learned. Overall, viewers’ technique recognition improved by about 5%—despite the fact that viewers have no real motivation to pay attention to ads on YouTube.

“There is a meaningful improvement in the ability to correctly identify manipulation techniques if you administer this kind of intervention in the field,” Roozenbeek said. As for how to apply these findings to antiscience beliefs, he shared a new video emphasizing the need to check “experts’” qualifications.
