Easier Done Than Said: Lessons from 6 Years of Preregistration

More than 6 years after APS began encouraging psychological scientists to preregister their research, the practice continues to earn praise from authors who say it makes them think more carefully about their hypotheses and methods, and, ultimately, makes their work stronger. Many authors remain reluctant to preregister, however, for reasons including lack of familiarity with the process or concern that it could be labor-intensive or inhibitory, even preventing them from doing exploratory research.

For a first-hand look at the process and impact of preregistration, the Observer reached out to the authors of several top preregistered studies from APS journals—as determined by number of citations and Altmetric scores. What motivated them to preregister their research? What was their experience in preregistering, in comparison with other research they didn’t preregister? And what benefits, if any, did they receive as a result of their decision to preregister?

Cause—and Effect

With preregistration, scientists specify their plans for a study (e.g., hypotheses, number and nature of subjects, procedures, statistical analyses, predictions) and then post those plans online in a locked file that editors, reviewers, and, ultimately, readers can access. Introduced to the APS journals in January 2014, the practice was embedded in several broader changes in APS publication standards and practices “aimed at enhancing the reporting of research findings and methodology,” wrote then-Psychological Science Editor Eric Eich in a 2014 editorial. “The theoretical advantage” of preregistration, wrote Eric-Jan Wagenmakers and Gilles Dutilh later in the Observer, “is that it sharpens the distinction between two complementary but separate stages of scientific inquiry: the stage of hypothesis generation (i.e., exploratory research) and the stage of hypothesis testing (i.e., confirmatory research). By respecting this distinction, researchers inoculate themselves against the pervasive effects of hindsight bias and confirmation bias.”

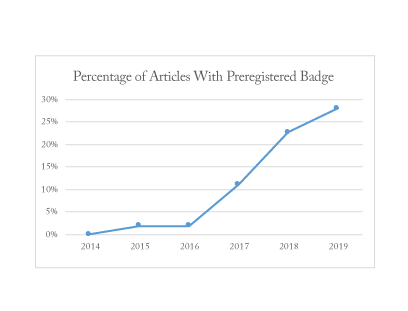

From 2014 through 2019, 43 of 154 eligible articles published in Psychological Science earned the Preregistered badge “for having a preregistered design and analysis plan for the reported research and reporting results according to that plan.” (APS also awards Open Science badges for Open Data and Open Materials.) Two other APS journals that publish primarily empirical work, Clinical Psychological Science and Advances in Methods and Practices in Psychological Science, also encourage preregistration and award badges for it.

“I think preregistration is a really good idea, and more of us should be doing it,” said Erin Heerey, principal author of a 2018 Psychological Science article, “The Role of Experimenter Belief in Social Priming,” that has 244 citations. “When you think about [your methods] in that level of detail and write them down before you do the work, it helps you catch details that reviewers will ask later and plan for those questions in advance.”

Amy Orben, principal author of the widely cited 2019 Psychological Science article “Screens, Teens, and Psychological Well-Being: Evidence From Three Time-Use-Diary Studies” (Altmetric score 1749, 24 citations) also found the experience positive.

“I think preregistration made our study stronger,” Orben said. “We found effects in the opposite direction than we were expecting from the first two data sets we analyzed to generate our hypotheses, and this did not cause too many issues in peer review as we had preregistered our study. Furthermore, it allowed us to showcase a distinct hypothesis-generating and hypothesis-testing framework, which I believe in and want to support.”

What prompted the decision to preregister? For Heerey, of Western University in Ontario, “we did it partly out of curiosity about what preregistration entailed, partly because we knew that given how controversial our findings were turning out to be, we needed to document our predictions clearly and publicly in advance, and partly because a reviewer mentioned it as a way of strengthening our work.”

For Orben, of the University of Cambridge, “it felt like the natural step.” She and her colleague had analyzed two preexisting datasets to identify their hypotheses, and they knew the third data set would be released the following month. “It was just enough time to preregister the hypothesis and analysis plan to then have a strong confirmatory test of our formed hypotheses in place.”

Will Skylark, also of the University of Cambridge and author of the 2017 Psychological Science article “People With Autism Spectrum Conditions Make More Consistent Decisions” (22 citations), said another benefit of preregistration is that “it requires considerable thought about what one is actually trying to find out. Thinking in detail about the implications of different analysis strategies forces one to be explicit about what, exactly, the hypotheses are that one wishes to test, and how one is testing them.” He cited pragmatic reasons as well. “We thought it best to commit to a single, reasonable plan to avoid a plethora of output and the risk of inflated error rates and unconscious ‘cherry picking’ of results,” he said. Further, he and his co-authors speculated that preregistering “would probably be regarded favorably by our peers.”

As to the perception that preregistration is labor-intensive, “that’s not my experience,” said Heerey. “I think it just shifts the work you do from after you have run the study to before. Basically, it means writing the methods section up front—which means that you pretty much have that section of the paper drafted before you run, which makes the process of writing easier.”

Michael Kardas of the University of Chicago Booth School of Business agrees. His 2018 Psychological Science article, “Easier Seen Than Done: Merely Watching Others Perform Can Foster an Illusion of Skill Acquisition,” has 18 citations.

“We preregistered several of our experiments and this wasn’t problematic: It takes a few extra minutes but also prompts you to think more carefully about your hypotheses and your analysis strategy,” Kardas said. “Plus it’s often possible to reuse language from one pre-registration when writing up another, so the process tends to be fairly efficient.”

Orben noted that the Open Science Framework, “with its many different preregistration templates, makes it relatively easy to pre-register and you can even embargo it to keep your registration in the private space until you want to release it.” And while she acknowledged that preregistration is “naturally a process of tying one’s hands, it did not feel particularly inhibiting as I was convinced by the way it will help me test my posed hypotheses.”

Heerey also disagrees with the notion that preregistration can be inhibiting. “You are welcome to explore your data,” she said. “The thing preregistration does prevent is people reporting exploratory findings as if they were main hypotheses. It is often the case that we explore our data (sometimes pilot data that are not preregistered and sometimes additional findings that we have discovered in a preregistered data set) and then conduct another preregistered study in which we specifically predict and examine those effects. Either way, I think this enhances the quality of the work we are doing in the lab.”

Heerey is such a fan of preregistration that she wishes “more journals would emphasize and encourage to a much greater degree the ability to seek peer review PRIOR to data collection. This gives researchers a chance to work collaboratively with reviewers to determine methodology, instead of adversarially”—if, for instance, results don’t match/replicate/confirm previous findings. “I think it would help prevent people from burying nonsignificant results, which can be very easy for reviewers/researchers to explain away or for researchers to simply never write up because they don’t understand why a method that should have generated some finding didn’t do so….”

Preregistered work still calls for flexibility, however. Orben said she did her best “to preregister a detailed analysis plan; however, I found through the peer-review process that the exact analyses could not be adhered to because of the data we acquired. We transparently adapted our analysis strategy, but looking back I wish we would have thought of such contingency planning beforehand.”

References and Related Reading

- Association for Psychological Science. (n.d.). Open practice badges. Retrieved from https://www.psychologicalscience.org/publications/badges

- Association for Psychological Science. (n.d.). Open science. Retrieved from https://psychologicalscience.org/publications/open-science

- Eich, E. (2014). Business not as usual. Psychological Science, 25, 3–6. https://doi.org/10.1177/0956797613512465

- Wagenmakers, E., and Dutilh, G. (2016). Seven selfish reasons for preregistration. Observer, 26, 13–14. Retrieved from https://www.psychologicalscience.org/observer/seven-selfish-reasons-for-preregistration
