Guide Your Students to Become Better Research Consumers

It’s the first day of class. Students read a popular press clipping about a study (something like “Eat dessert for breakfast to lose weight,” “Facebook can raise your self-esteem,” or “Why we lie”) and give their first responses.

Here’s what they say:

“How big was the sample? Was it representative of the whole population?”

“Was the sample random?”

“We questioned what type of people these were. Why these 63 people?”

“Did they do a baseline measure?”

In my years of teaching psychology, I’ve made an informal study of student responses like these. I’ve learned that students — at least the students at my selective university — are not very good consumers of research information. When it comes to reading about research, my students are very concerned about things that we psychologists are not: They care a lot about whether a sample is large. They care about whether the sample was “random.” They are deeply skeptical about survey research — they think everybody lies.

Beginning college students get a lot wrong, too: They almost always think larger samples are more representative. They call every problem with a study a “confound.” They know that “random” is important in research, but they’re not sure whether they mean “random assignment” or “random sampling.” They distrust the experimental post-test-only design: They want baseline tests to make sure that “groups are equal.”
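One way to show students why a post-test-only experiment does not need a baseline measure is a small simulation. The sketch below is an illustration under assumed values, not data from any study: the trait scores (mean 100, SD 15), the group sizes, and the function name `baseline_gaps` are all hypothetical. It repeatedly assigns people to two groups at random and records how far apart the groups start on a pre-existing trait.

```python
import random
import statistics

def baseline_gaps(n_per_group, n_studies=2000, seed=1):
    """Simulate many randomly assigned two-group studies.

    Returns (average signed gap, average absolute gap) between the groups
    on a trait that exists before any treatment is given.
    """
    rng = random.Random(seed)
    signed = []
    for _ in range(n_studies):
        # Hypothetical pre-existing trait scores for everyone in the study.
        people = [rng.gauss(100, 15) for _ in range(2 * n_per_group)]
        # Random assignment: chance alone decides who lands in which group.
        rng.shuffle(people)
        treatment = people[:n_per_group]
        control = people[n_per_group:]
        signed.append(statistics.fmean(treatment) - statistics.fmean(control))
    average_gap = statistics.fmean(signed)
    typical_size = statistics.fmean([abs(g) for g in signed])
    return average_gap, typical_size

for n in (10, 100):
    average_gap, typical_size = baseline_gaps(n)
    print(f"n per group = {n}: average gap = {average_gap:.2f}, "
          f"typical size of gap = {typical_size:.2f}")
```

Across many simulated studies the signed gap should hover near zero, and the typical size of the gap should shrink as the groups get larger. Note what the simulation does not address: random assignment decides who gets which condition (an internal validity matter), while random sampling decides who is in the study at all (an external validity matter).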

At the start of their psychology education, students are not good consumers of information. But they need to be, and we can teach them to be.

Why Are Consumer Skills Needed?

Our undergraduate students won’t become just like us: Most of them (even the best ones) do not want to be researchers when they grow up. In my own classes, only one or two hands go up when I ask who wants to be a researcher. But this doesn’t mean students don’t need research skills — they just need a different set of research skills. Rather than learning to plan, execute, analyze, and write up a research study, students need to learn how to read research and evaluate it systematically.

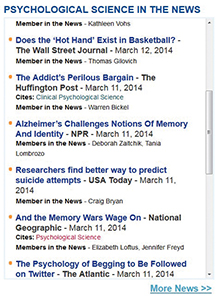

The APS website provides a continually updated list of psychological science studies that have been covered in the popular press. Use this list to find current examples for your students to analyze — both in class and on exams.

In their future roles as parents, therapists, salespeople, teachers, and so on, our students will need a particular subset of critical thinking skills related to reading and evaluating research information. They need to learn why a study with a small sample isn’t necessarily terrible; why researchers rarely conduct experiments on random samples of the population; and why only an experiment can support a causal claim. And importantly, in their future lives, our students won’t necessarily be reading about our research in journals; they’re much more likely to encounter a description of a research study online, where a journalist has picked up an interesting study about Facebook, chocolate, ADHD, or bullying. Students should learn to evaluate this content, too.

Finally, good quantitative reasoning skills are an essential part of a liberal arts education. Educated citizens should learn to use data to support arguments, as well as how to evaluate the data used in others’ arguments.

Teach Students to Use Four Validities

I have found it useful to teach students a systematic way to evaluate any research study based on four “Big Validities” — those used by Cook & Campbell (1979) and promoted by other methodologists (e.g., Judd & Kenny, 1981). The big four are:

- external validity (the extent to which a study’s findings can generalize to other populations and settings);

- internal validity (the ability of a study to rule out alternative explanations and support a causal claim);

- construct validity (the quality of the study’s measures and manipulations); and

- statistical validity (the appropriateness of the study’s conclusions based on statistical analyses).

To evaluate any study they read, students can ask questions in these four categories:

- “Can we generalize?” (External);

- “Was it an experiment? If so, was it a good one?” (Internal);

- “How well did they operationalize that variable?” (Construct); and

- “Did they have enough people to detect an effect? How big was the effect? Is it significant?” (Statistical).

These four validities can be used to evaluate almost any study and can be spiral-taught at any level. Introductory psychology students can master the questions and apply them to simple studies. In research methods courses, almost all topics fit somewhere into this framework. In upper-level courses, students can apply these validities to primary sources they read. Even graduate students can use this framework to organize their first peer reviews of journal submissions.
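The statistical validity question “Did they have enough people to detect an effect?” can be made concrete with a rough simulation. The sketch below is only an illustration: the true difference (5 points), the noise level, the sample sizes, and the function names are assumptions chosen for the example, and the cutoff of 1.96 is a normal approximation to a two-sided .05 test rather than an exact t test.

```python
import random
import statistics

def sample_variance(values):
    """Unbiased sample variance (divides by n - 1)."""
    mean = statistics.fmean(values)
    return sum((v - mean) ** 2 for v in values) / (len(values) - 1)

def estimated_power(n_per_group, true_diff=5.0, noise_sd=10.0,
                    n_studies=1000, seed=7):
    """Share of simulated two-group studies that detect a real difference."""
    rng = random.Random(seed)
    detections = 0
    for _ in range(n_studies):
        control = [rng.gauss(0.0, noise_sd) for _ in range(n_per_group)]
        treated = [rng.gauss(true_diff, noise_sd) for _ in range(n_per_group)]
        diff = statistics.fmean(treated) - statistics.fmean(control)
        # Standard error of the difference between two independent means.
        se = (sample_variance(treated) / n_per_group
              + sample_variance(control) / n_per_group) ** 0.5
        if abs(diff / se) > 1.96:  # approximate two-sided .05 criterion
            detections += 1
    return detections / n_studies

print("20 per group:", estimated_power(20))
print("100 per group:", estimated_power(100))
print("100 per group, noisier measure:", estimated_power(100, noise_sd=20.0))
```

Under these assumptions, the larger study should detect the same true effect far more often than the smaller one, and a noisier measure should lower the detection rate even when the sample size stays the same.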

At the beginning of the semester, students’ ways of thinking about research are like a “before” picture on a home-organization show. Not everything they say about research is garbage, but it’s not all in the right place, either:

- “Individual differences are such a large confound in this study, and that’s why it also has problems generalizing to other situations, since every situation is different.”

- “There are too many confounds. For example, everybody’s mood is different and that affected the data.”

- “This sample size is too small to generalize.”

The four validities serve as organizational boxes into which students can sort disordered thoughts about research. Statements about “random sampling” and “every situation is different” belong in the External Validity box. Questions about “random assignment” and “confounds” go in the Internal Validity box. Students should be reminded that there is a Construct Validity box, too, since they do not spontaneously ask about the quality of a study’s measures and manipulations.

As the semester progresses, students learn that “this sample size is too small” is not, in fact, an external validity concern, but a statistical validity problem (if, in fact, it’s a problem at all). Similarly, they can learn that the common complaint “everybody is different” is not an internal validity problem, as they often surmise, but rather a statistical problem of unsystematic variance and noise.
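The distinction between sample size and representativeness can be sketched with made-up numbers. In the illustration below, the population, its two subgroups, and every score are hypothetical. It compares a large sample drawn from only one subgroup with a small sample drawn at random from the whole population.

```python
import random
import statistics

rng = random.Random(42)

# Hypothetical population of 50,000 people in two equal subgroups
# whose true average scores differ (all values are invented).
group_a = [rng.gauss(60, 10) for _ in range(25_000)]
group_b = [rng.gauss(40, 10) for _ in range(25_000)]
population = group_a + group_b
true_mean = statistics.fmean(population)

# Large but unrepresentative sample: 5,000 people, all from subgroup A.
big_biased = rng.sample(group_a, 5_000)
# Small random sample: 50 people drawn from the whole population.
small_random = rng.sample(population, 50)

biased_error = abs(statistics.fmean(big_biased) - true_mean)
random_error = abs(statistics.fmean(small_random) - true_mean)

print(f"true mean: {true_mean:.1f}")
print(f"error of large biased sample (n=5000): {biased_error:.1f}")
print(f"error of small random sample (n=50): {random_error:.1f}")
```

In this sketch, the large sample misses the population mean by roughly the gap between subgroup A and the population as a whole, no matter how many people it includes. The small random sample is noisier from draw to draw, but it is not systematically off target. How a sample was obtained is the external validity question; how many people it contains is a statistical one.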

Perhaps most importantly, the four validities approach teaches the critical thinking skill of setting priorities. A study is not simply “valid” or “invalid.” Instead, researchers prioritize some validities over others. Many psychology studies have excellent construct and internal validity, but poor external validity. But that can be okay — psychological scientists who are testing a theory or trying to make a causal claim typically prioritize internal over external validity.

Teaching Consumer Skills All Semester Long

If we want students to learn to be better consumers of research information (including that covered in popular press), they need practice via multiple learning opportunities. Transfer of learning is demonstrably difficult, so we can’t assume that teaching students to conduct their own studies will simultaneously teach them to be good consumers of other people’s research. We should provide multiple structured activities and assessments that require consumer skills.

To engage students as consumers, use real-world examples of psychological science in the news. Students will then read about research the way they will most commonly encounter it in the future: secondhand. When journalists cover psychology research (and they often do), they usually include enough basic details to identify whether a particular study was correlational or experimental, what the main variables were, what the sample was, and so on. Students can practice asking about the four validities as they read news stories.

Through using journalistic sources, students learn to identify when they don’t have enough information. A journalist may not describe how a researcher operationalized “exposure to Facebook” or “dessert for breakfast.” And journalists rarely indicate which of a study’s findings are statistically significant. It’s good for students to identify such gaps. But be careful — sometimes students assume the worst. Mine commonly say, “It doesn’t say if people were randomly assigned, so the internal validity is poor.” We need to assure students that “I don’t know” can be the correct answer.

To find news articles, consult online treasure troves. The APS Twitter feed, @PsychScience, publishes stories about APS members whose research is covered in the press, and the APS website keeps a running list as well, at www.psychologicalscience.org/MembersInTheNews. Many other Twitter users specialize in finding and announcing good examples of psychological science. In a pinch, you can always go to msnbc.com and click on “Health” or “Science” for classroom-worthy stories.

Consumer skills involve more than the four validities. For instance, students can learn that journalists do not always represent the research they are covering accurately. My students benefit from a group assignment in which they analyze an example of psychological science in the news, comparing the journalist’s coverage to that of the original article.

If you prioritize students’ ability to read journal articles over their ability to read journalistic sources, then similar ideas apply. Students may not understand everything they read in a journal article, but they can get used to coping with a piece of writing they do not fully understand. It takes skill to identify what they know and what they need to understand better. The four validities apply here too. After summarizing an article, students can use the four validities to evaluate four aspects of its quality.

Finally, good teaching requires authentic assessment. If we want students to be good consumers of information, we should not only require consumer skills in class; we should assess them, too. Educator Dee Fink says “authentic assessments” should present students with the real-world situations in which students will use their skills. If we want psychology students to be able to criticize popular press coverage or read journal articles, our summative assessments should ask them to do this. Put excerpts from such sources on exams. Require students to read and critique journal articles as final projects in research methods instead of requiring students to plan their own studies. Ask students to critically analyze how well a trade book author summarizes a journal article. (Feel free to contact me for sample exam questions or final assignments that focus on consumer skills.)

More and more psychologists are writing successful trade books; journalists and science writers are producing short, engaging pieces on the latest psychological research. Let’s exploit this phenomenon in our classrooms and use it to teach citizens the skills they really need for the future: how to identify and systematically analyze a scientific argument.

References

Barnett, S. M., & Ceci, S. J. (2002). When and where do we apply what we learn? A taxonomy for far transfer. Psychological Bulletin, 128, 612–637.

Cook, T. D., & Campbell, D. T. (1979). Quasi-experimentation: Design and analysis for field settings. Chicago, IL: Rand McNally.

Fink, D. (2003). Creating significant learning experiences: An integrated approach to designing college courses. New York, NY: Jossey-Bass.

Judd, C. M., & Kenny, D. A. (1981). Estimating the effects of social interventions. Cambridge, MA: Cambridge University Press.

Lutsky, N. (2008). Arguing with numbers: A rationale and suggestions for teaching quantitative reasoning through argument and writing. In B. L. Madison & L. A. Steen (Eds.), Calculation vs. context: Quantitative literacy and its implications for teacher education (pp. 59–74). Washington, DC: Mathematical Association of America. Retrieved from http://www.maa.org/Ql/cvc/cvc-059-074.pdf (accessed August 1, 2013).

Mook, D. (1989). The myth of external invalidity. In L. W. Poon, D. C. Rubin, & B. A. Wilson (Eds.), Everyday cognition in adulthood and late life (pp. 25–43). Cambridge, England: Cambridge University Press.

Comments

Excellent article. I also teach methodology classes, and I see in my students many of the misconceptions described. I agree that one of the key tools is learning how to find scientific literature and how to read it critically (this is essential for working on those misconceptions). Like my colleagues, I believe that staying up to date on the issues of our profession is necessary, whatever specific activity one performs as a psychologist. Thanks for your contribution.

Another important criterion is whether the article’s author is politically biased. For example, research by John Jost paints Conservatives in a very bad light (i.e., they’re people who “endorse” inequality and are resistant to progressive change). We need to ask ourselves whether results like this are the complete picture. To swing the pendulum, what are the corresponding pitfalls of communists, socialists, and Liberals? What are the merits of right-wing ideologies? With those considerations, Stern Business School Professor Jonathan Haidt does a much better job than John Jost at explaining the psychological underpinnings of Liberals vs. Conservatives precisely because his theories are data-driven and scientifically sound, not ideologically-motivated research that highlights bad qualities of the side you’re not on.

– Simon

Hello! I am attempting to design a course that specifically helps my undergraduate students in nutrition become good consumers of research. I am interested in any of the materials you mentioned that you would be willing to share, as I am trying to get this together for winter quarter. Thank you for your time and consideration.
